Uploading Source Code to Be Run on AWS

One night in June 2017, I stumbled across a website called freecodecamp.org. I was a teacher at the time, looking for a career change. But I assumed being a developer was out of my reach.

After all, I didn't consider myself a math whiz, never went to school for computer science, and felt I was too old to get into coding.

Thankfully, freeCodeCamp helped me take my first steps toward becoming a programmer, and I soon shed each of those self-limiting thoughts.

Now, as a Samsung software engineer and three-time AWS-certified developer, I look back at those nights and weekends I spent working through the freeCodeCamp curriculum and see them as a major contributor to my successful career transition and my accomplishments as a developer.

With my teacher-heart still present, though, I wrote this post to encourage other freeCodeCamp students to continue with their learning, as well as to supplement their freeCodeCamp curriculum.

Familiarity with a cloud computing platform like AWS, Azure, or Google Cloud is an increasingly common requirement in job postings.

This post will give any newbie who is still working through freeCodeCamp's curriculum a simple introduction to the vast world of cloud computing. I'll show you how to take one of your freeCodeCamp front end projects and deploy it on AWS in a variety of ways.

Enjoy, and thanks freeCodeCamp!

Prerequisites

The point of this post is to extend your learning from the Front End Development Libraries Projects portion of the freeCodeCamp curriculum by deploying those projects to AWS.

So the prerequisites are:

- finish one of the Front End Libraries Projects challenges

- have an AWS account (sign up on the AWS website if you don't have one yet)

Why Deploy Your Project to AWS?

As you work through the freeCodeCamp curriculum and finish your Front End Libraries Projects, you submit those projects via CodePen, where freeCodeCamp runs a series of unit tests to verify that you correctly completed their user stories.

Confetti falls, you see an inspirational phrase, and you're probably very proud of your project. You might even want to share that accomplishment with your friends and family.

Sure, you could share your CodePen link with them, but in the real world, when a company finishes a project, it doesn't make it available via CodePen. It deploys it. So, let's do that too!

Just a quick note: when I say "deploy", I mean taking your code from a local environment (your computer, or the freeCodeCamp or CodePen editors) and putting it on a server. That server, which is just another computer, is networked so that the world can access your site.

If you wanted to, you could set up a PC in your home and do the proper networking for it to serve your project to the world. Or, you could pay a hosting company to serve your website for you.

There are a variety of approaches to deploying code, but one very popular method is to use a cloud computing platform like Amazon Web Services (AWS). More on that next.

What is AWS? A Brief Intro to the Cloud

Amazon Web Services (AWS) offers a "cloud computing platform". That's a little jargon with a lot packed into it. Let's unpack it.

AWS offers 200+ services, ranging from simply storing files and running servers to converting speech to text (or text to speech), machine learning, private cloud networks, and much more.

The basic idea behind cloud computing is to get IT resources on demand. The alternative, as we talked about before, is to own those IT resources yourself.

There are a number of benefits to using a cloud computing platform instead of owning your own resources, one of the main ones being cost savings.

Imagine trying to pay for all the physical IT resources it would take to run the 200+ services AWS offers. That upfront cost is something most companies cannot afford, and even fewer could pay for the engineers to configure it all.

Also, since the resources are on demand, cloud platforms let you launch resources much faster than an IT department could.

In short, with a cloud computing platform like AWS you save on cost and deployment time, plus many more benefits, not the least of which is security. Needless to say, cloud computing is the new sexy approach to IT, and AWS is leading the pack.

Why does this matter to you? If you intend to pursue a career in development, regardless of your focus (backend, frontend, web technologies, mobile apps, gaming, desktop applications, and so on), you will find that many job postings mention experience with a cloud computing platform.

So the more familiar you become with one, like AWS, the more you'll separate yourself from other candidates.

How to Deploy your freeCodeCamp Project with AWS S3

What is AWS S3?

Throughout this post, we'll deploy your freeCodeCamp front end project using various AWS services.

Our first approach is through the AWS service called S3. S3 stands for Simple Storage Service. As you might have guessed from that name, the service lets you simply store stuff, or more specifically, objects.

You can find tutorials and courses on S3 that are hours and days long. On the surface, though, it's just a place where you can store objects. Objects can be things like image files, video files, even HTML, CSS, and JavaScript files.

As you dive deeper into S3, you'll learn that it lets you do a lot of things to and with those objects. But for our project, we just want to learn how to store objects and look at a single one of those deeper features: using S3 as a website hosting service.

That's right, AWS S3 is a service we can use to deploy a website.

How to Get Your Project Ready for Deployment

Throughout this post, I'll be using the Random Quote Machine project. I am using the code from the example provided in the instructions.

We need to take your CSS, HTML, and JS code from CodePen and put each into its own separate file in a text editor.

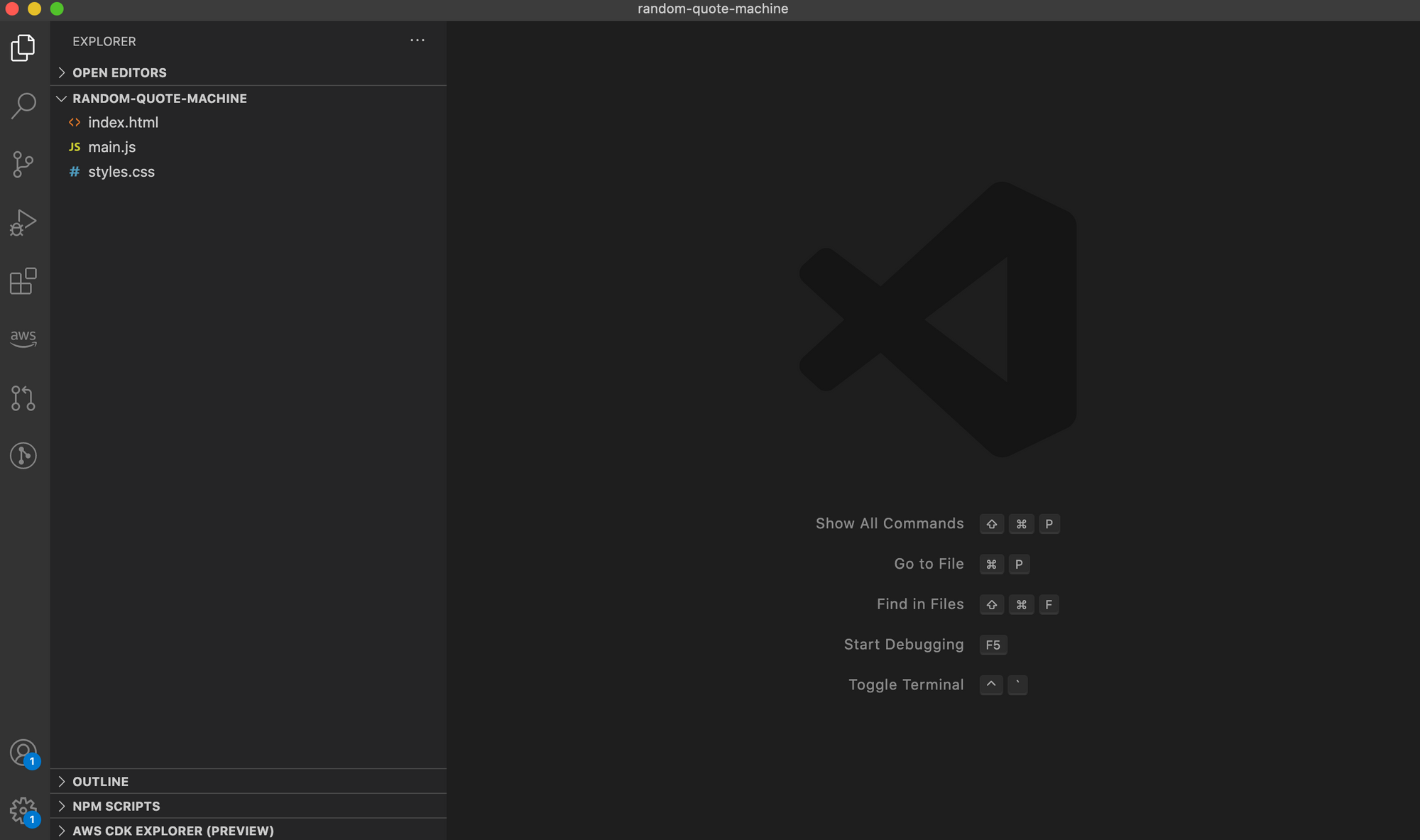

Open Your IDE (for example, Visual Studio Code)

In the CodePen environment, CodePen links your CSS to your HTML code, as well as your JS file to your HTML. We need to account for this before we deploy to S3, so we are going to test locally first.

Open your editor of choice and create a new directory (folder) to hold your three CodePen files. I named mine random-quote-machine. Next, create three new files:

- index.html

- styles.css

- main.js

Go ahead and copy your CodePen HTML into index.html, your CodePen JS into main.js, and your CodePen CSS into styles.css.

Check your <body></body> tags

CodePen does not require the <body> tag, but it's good practice to add it. Make sure your HTML content has one. Look back at your fCC curriculum if you don't remember where these go.

Add <link> and <script>

You will now need to link your styles.css and main.js to your index.html, since CodePen no longer does it for you.

Inside your <head> element, above your opening <body> tag, add <link rel="stylesheet" href="styles.css" />, which will make the CSS in styles.css take effect.

Just before your closing </body> tag, add <script src="main.js"></script>, which will load the JavaScript in main.js into index.html.
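Before opening the page, you can double-check that both references made it into index.html. Here's a minimal Node.js sketch (Node gets a proper introduction later in this post) built around a hypothetical helper, findMissingReferences. It uses simple pattern matching rather than a real HTML parser, and it assumes your files are named exactly styles.css and main.js, so treat it as a rough check.

```javascript
// Rough check (simple pattern matching, not a real HTML parser) that index.html
// links the two files CodePen used to wire up for you.
// findMissingReferences is a hypothetical helper written for this example.
function findMissingReferences(html) {
  const checks = [
    {
      label: '<link rel="stylesheet" href="styles.css" />',
      pattern: /<link[^>]*href=["']styles\.css["'][^>]*>/i,
    },
    {
      label: '<script src="main.js"></script>',
      pattern: /<script[^>]*src=["']main\.js["'][^>]*>/i,
    },
  ];
  // Keep the label of every check whose pattern does not appear in the HTML.
  return checks.filter((check) => !check.pattern.test(html)).map((check) => check.label);
}

// Example: this page links the stylesheet but forgot the script tag.
const sample = '<head><link rel="stylesheet" href="styles.css" /></head><body></body>';
console.log(findMissingReferences(sample));

// To check your real file (run from your random-quote-machine folder):
// const fs = require("fs");
// console.log(findMissingReferences(fs.readFileSync("index.html", "utf8")));
```

An empty array means both tags were found.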

Verify that Your Site Still Works Locally

It's time to test that our code is working. Open a web browser, then press Ctrl+O (Cmd+O on a Mac) to select a local file to display in the browser. Navigate to the folder with your three files, then double-click index.html to open it.

If everything is working, great! It wasn't for me, though. The code I used had Sass styling, which needed some adjustments.

If you imported any libraries via CodePen, those will also need to be imported in your files, likely through a CDN. A quick Google search for those libraries' documentation should help you find out how to import them.

Make any changes you find necessary to get your project in working order, but remember, the purpose of this exercise is to learn about deploying a website on AWS S3. So if you're getting bogged down with something small but the site mostly works, continue with this tutorial to keep the momentum going, and resolve any CSS or JS issues later.

Once your site works locally, even if not 100% to your liking, let's get into AWS.

How to Work with the S3 Management Console

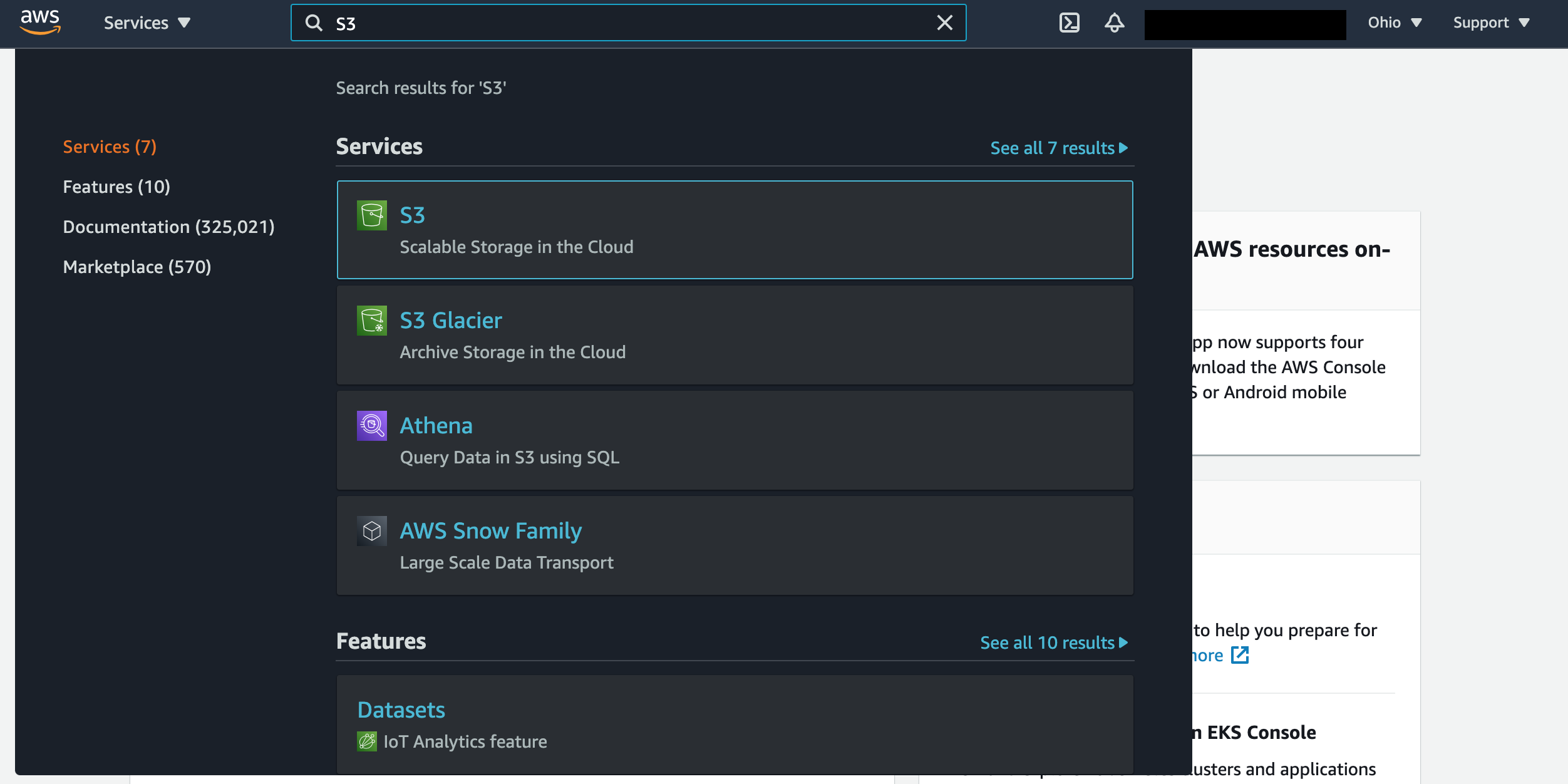

After logging into AWS, type "S3" in the top search bar and select the option that says "S3", not "S3 Glacier".

Alternatively, instead of using the search bar, you could expand the "Services" dropdown and enjoy looking at all the AWS service offerings. Either way, let's click on S3.

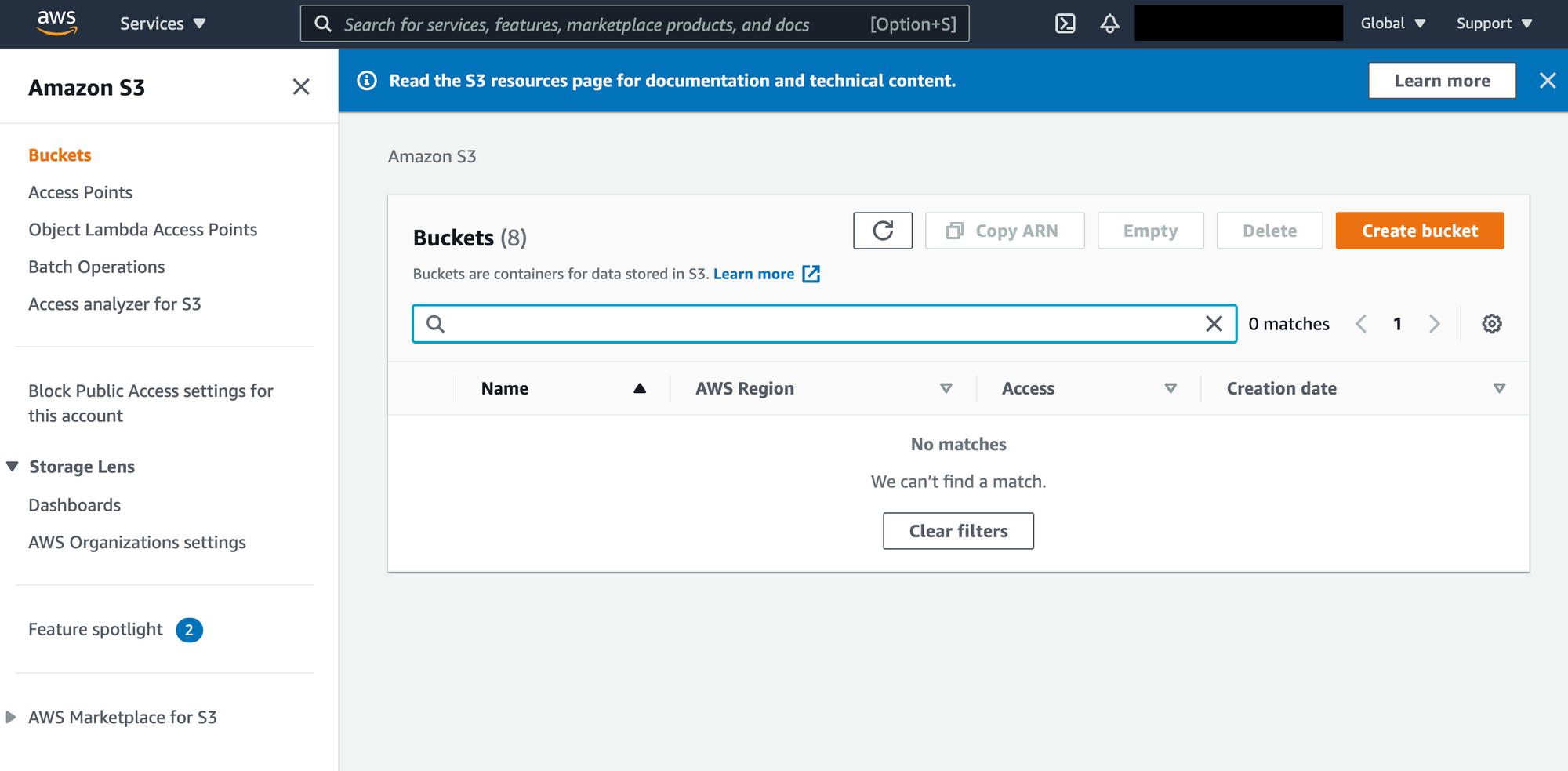

You should now see the S3 Management Console. Something like this…

This is what AWS calls the Management Console. It's the web interface for interacting with AWS and creating resources and services.

There is a Management Console for each of the AWS services, but the console is not the only way you can interact with AWS. There is also a CLI (command line interface), which lets you script what you would like AWS to do.

Instead of clicking on buttons, in the CLI you type what you would otherwise have clicked on (though less verbosely). We are going to stick with the console for now.

Here you can view all of your S3 buckets. S3 is composed of buckets, and a bucket is basically a big container where we get to put files. Think of a bucket as a drive on your computer (like your C: drive). Technically it isn't (it's a method for routing in S3), but for now it's fine to see the bucket this way.

Create Your S3 Bucket

Click the Create bucket button. Then, enter a unique name for your bucket (no spaces allowed).

The names of your buckets must be globally unique. So, if you try to create one named test, someone in the world has probably used that already, so it won't be available.
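Besides being unique, bucket names have to follow S3's naming rules. The sketch below checks the most common ones: length, allowed characters, how the name starts and ends, adjacent periods, IP-address look-alikes, and the reserved "xn--" prefix. AWS documents a few more reserved prefixes and suffixes. Passing this check also says nothing about whether the name is still available. bucketNameProblems is a hypothetical helper name.

```javascript
// Sketch: check a proposed bucket name against the most common S3 general
// purpose bucket naming rules. Not exhaustive, and it cannot tell you whether
// the name is still available (names are global across all AWS accounts).
// bucketNameProblems is a hypothetical helper written for this example.
function bucketNameProblems(name) {
  const problems = [];
  if (name.length < 3 || name.length > 63) {
    problems.push("must be 3 to 63 characters long");
  }
  if (!/^[a-z0-9.-]*$/.test(name)) {
    problems.push("may only contain lowercase letters, numbers, dots (.), and hyphens (-)");
  }
  if (!/^[a-z0-9]/.test(name) || !/[a-z0-9]$/.test(name)) {
    problems.push("must begin and end with a lowercase letter or number");
  }
  if (name.includes("..")) {
    problems.push("must not contain two periods in a row");
  }
  if (/^\d{1,3}(\.\d{1,3}){3}$/.test(name)) {
    problems.push("must not look like an IP address (for example 192.168.5.4)");
  }
  if (name.startsWith("xn--")) {
    problems.push('must not start with "xn--"');
  }
  return problems;
}

console.log(bucketNameProblems("random-quote-machine-2021")); // []
console.log(bucketNameProblems("My Quote Machine"));          // two problems: characters and first letter
```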

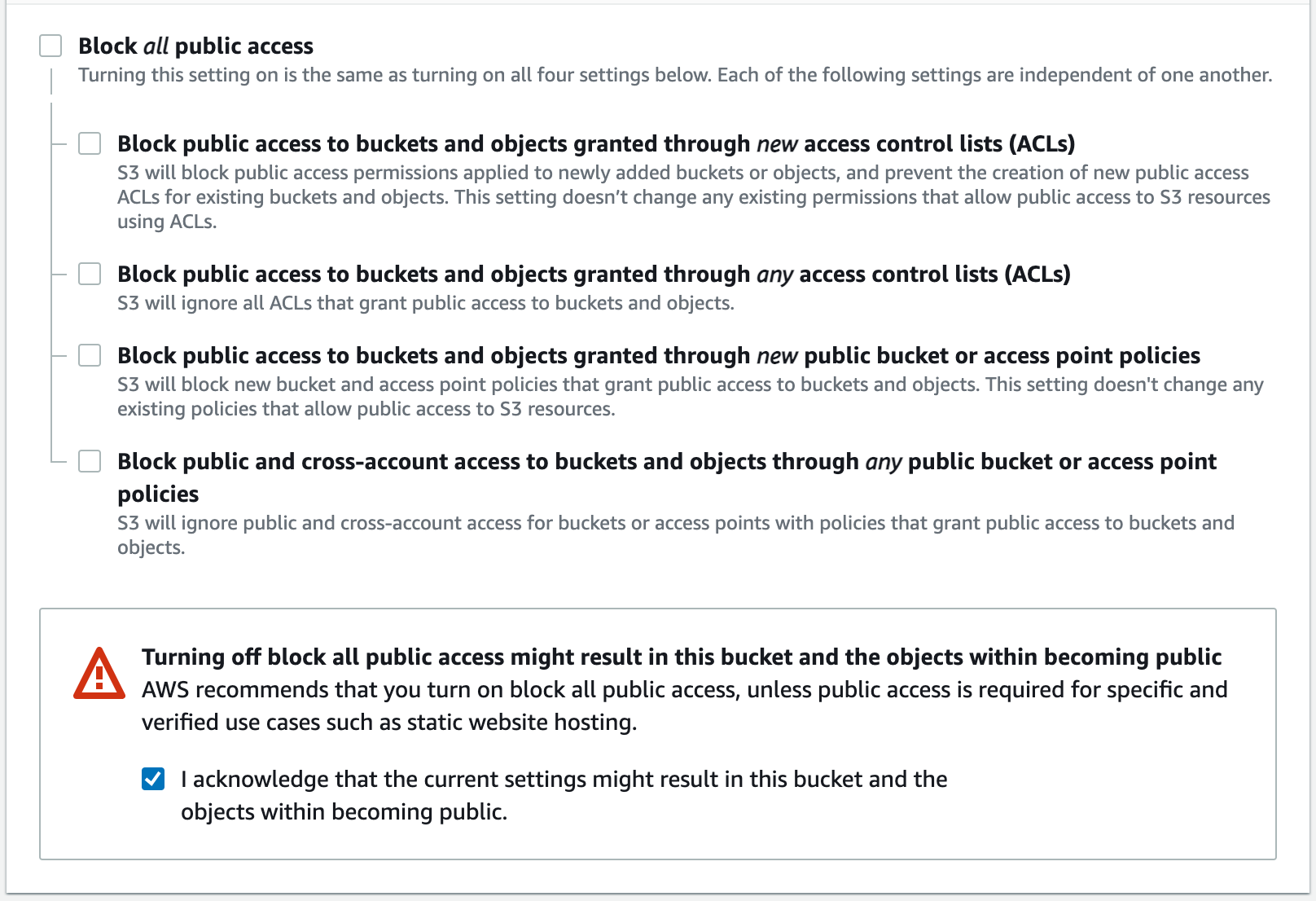

After entering your bucket's name, scroll down to the section called Block Public Access settings for this bucket. Uncheck the Block all public access checkbox, and then, further down, check the box acknowledging the warning that appears.

Security and permissions in AWS are a lengthy topic, but, as you can see from the warning, you typically do not want to unblock public access to your files.

In our case, we want our website visitors' browsers to be able to access our index.html file so that they can see our project.

There are other methods we could use to unblock access to our bucket's content, but for now this is sufficient for our goal of introducing you to S3 and deploying a project on AWS.

Scroll down to the bottom of the form and click the Create bucket button. If your name was unique, you just made your first AWS S3 bucket. Congrats!

It's pretty amazing to stop and think that in that short amount of time, AWS just made space available for you in its network to store an unlimited amount of data. That's right, unlimited!

Although the maximum size for a single object in your bucket is 5 terabytes (good luck hitting that), your new bucket can hold as much as you want to put in it.

Upload Your Files to Your Bucket

Next, back in the S3 console view, click the link to your newly created S3 bucket. Once inside, click the Upload button and select your three files (index.html, styles.css, and main.js).

After you see their names show up in the list of items about to be uploaded, scroll down to the bottom of the upload form.

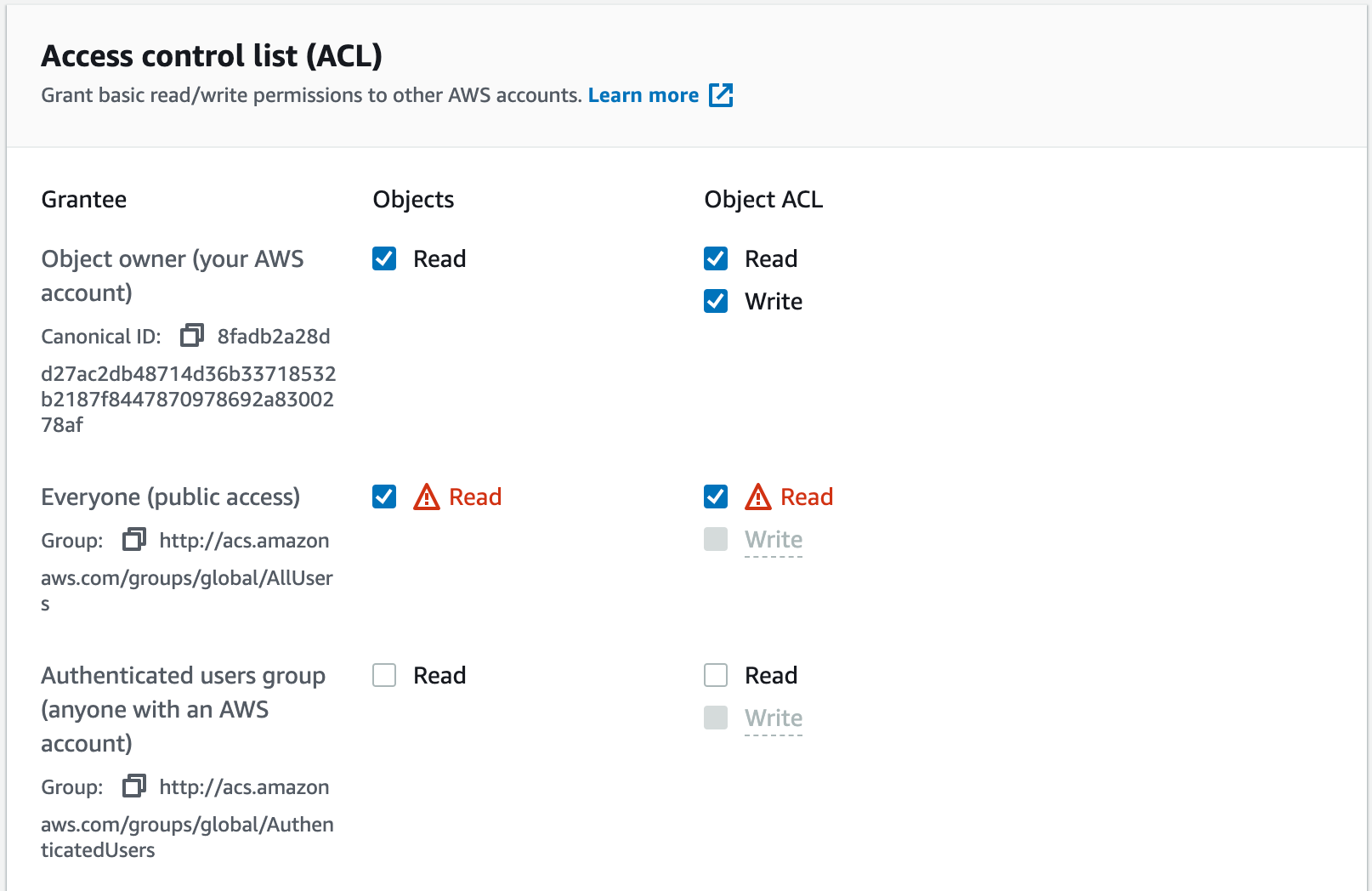

Expand Additional upload options, then scroll down to Access control list (ACL). Check the Read boxes for Everyone (public access), and then check the acknowledgement box that appears underneath, just like when we created our bucket.

Scroll down the rest of the way and click the Upload button.
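The Read checkboxes above grant public access one object at a time through ACLs. Depending on your bucket's Object Ownership setting, those checkboxes may not be available, because newer buckets have ACLs disabled by default. One of the other ways to unblock access mentioned earlier is a bucket policy: a JSON document attached to the whole bucket under Permissions, then Bucket policy. The sketch below builds a policy that lets anyone read (s3:GetObject) every object in the bucket. "your-bucket-name" is a placeholder, publicReadPolicy is a hypothetical helper, and the policy only takes effect while Block all public access is off.

```javascript
// Sketch: build an S3 bucket policy that allows anyone ("*") to read
// (s3:GetObject) every object ("/*") in one bucket.
// publicReadPolicy is a hypothetical helper; the bucket name is a placeholder.
function publicReadPolicy(bucketName) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "PublicReadGetObject",
        Effect: "Allow",
        Principal: "*",
        Action: "s3:GetObject",
        Resource: `arn:aws:s3:::${bucketName}/*`,
      },
    ],
  };
}

// Print it as formatted JSON, ready to paste into the Bucket policy editor.
console.log(JSON.stringify(publicReadPolicy("your-bucket-name"), null, 2));
```

Swap in your real bucket name before pasting. The Resource must name your bucket, or the policy won't apply to your files.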

Enable Hosting on the S3 Bucket

Now we have our S3 bucket, we have our files, and we have made them publicly accessible, but we're not quite done.

By default, S3 buckets are not configured to be treated like web servers. Most of the time, companies want the contents of their S3 buckets kept private (like hospitals storing medical records, or banks saving financial statements). To make the bucket act like a web server and give us a URL to access our files, we need to adjust a setting.

Back in your bucket's main view, click the Properties tab.

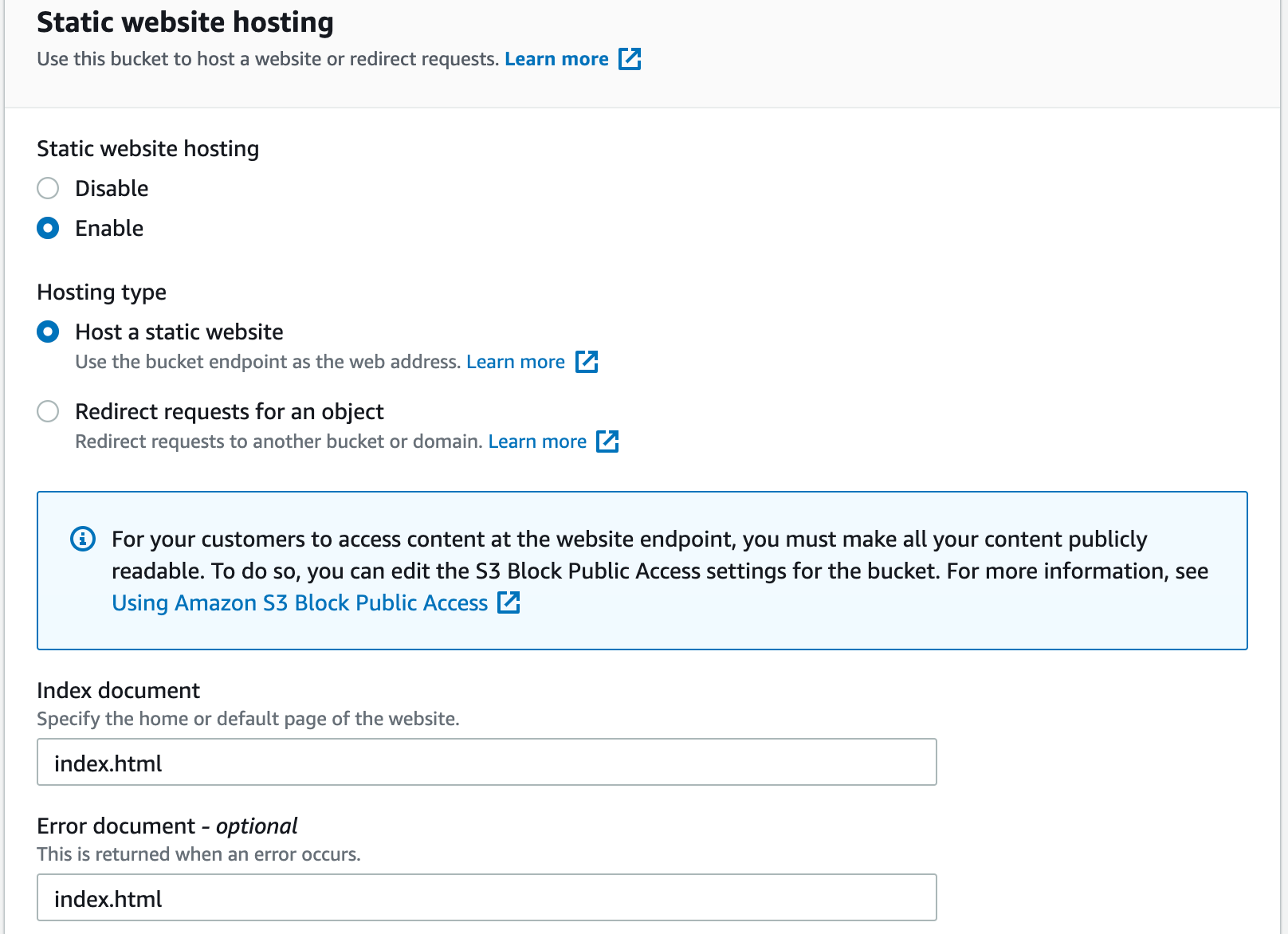

Scroll down to the bottom until you find Static website hosting, and click the Edit button. Set Static website hosting to Enable, and then type index.html in both the Index document and Error document fields. Then click the Save changes button.

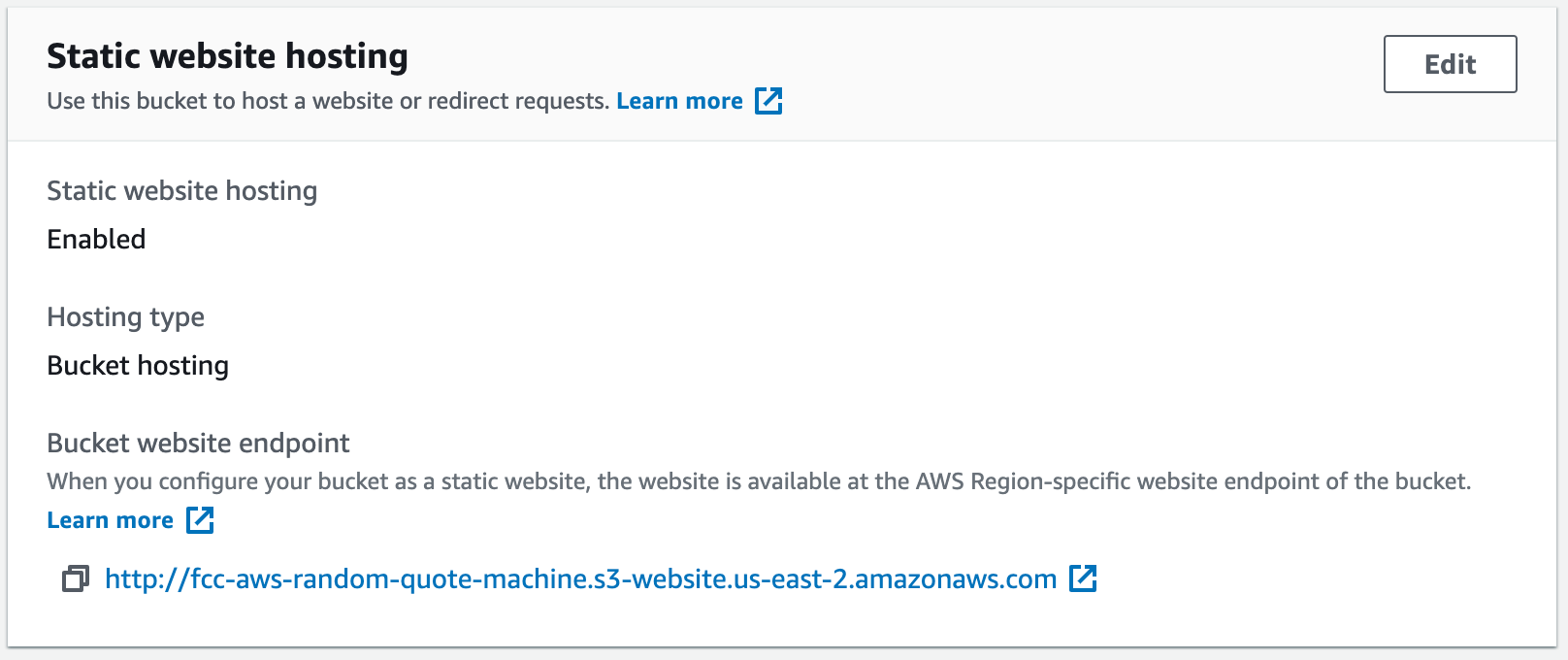

You'll return to the Properties tab of your bucket. Scroll back down to the Static website hosting section, and there you'll find a link.

If you read that URL, you'll notice the name of your bucket is in it. By typing index.html in both fields during configuration, we told AWS to load the index.html page when this bucket's URL is opened, and to fall back to it when a requested page can't be found.
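To make the Index document and Error document settings concrete, here is a rough model in plain JavaScript of how a website endpoint picks which object to return. It's a simplified sketch, not AWS code. resolveWebsiteKey is a hypothetical name, and real S3 handles extra cases that are left out here, such as redirecting /about to /about/ when about/index.html exists.

```javascript
// Simplified model (not AWS code) of how an S3 website endpoint maps a
// request path to an object key, using the Index and Error document settings.
function resolveWebsiteKey(
  requestPath,
  existingKeys,
  indexDocument = "index.html",
  errorDocument = "index.html"
) {
  // Object keys have no leading slash: "/main.js" is stored as "main.js".
  let key = requestPath.replace(/^\/+/, "");
  // The root ("/") and "folder" paths ending in "/" get that folder's index document.
  if (key === "" || key.endsWith("/")) {
    key += indexDocument;
  }
  if (existingKeys.has(key)) {
    return { key, isErrorDocument: false };
  }
  // Anything that doesn't exist falls back to the error document.
  return { key: errorDocument, isErrorDocument: true };
}

const uploaded = new Set(["index.html", "styles.css", "main.js"]);
console.log(resolveWebsiteKey("/", uploaded));        // { key: 'index.html', isErrorDocument: false }
console.log(resolveWebsiteKey("/main.js", uploaded)); // { key: 'main.js', isErrorDocument: false }
console.log(resolveWebsiteKey("/about", uploaded));   // { key: 'index.html', isErrorDocument: true }
```

Because both settings are index.html in our setup, a visitor who mistypes a path still lands on your project instead of a bare error page.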

If you click on that link, your project should now be viewable.

Troubleshooting

If your site worked locally but is not working when you open the S3 website endpoint link, there are a few common issues to check.

First, make sure you selected the same files that worked locally. Reopen the local files in a web browser to make sure they work. If they work locally but not through S3, try re-uploading them, making sure you select the same files.

Next, go to your bucket's Permissions tab and make sure Block all public access is set to Off.

Lastly, delete the files you uploaded and re-upload them, making sure that you select the Read checkboxes described above, as well as the acknowledgement box.

If you are still having problems, feel free to comment with your issue and I'll be happy to help. Don't get too discouraged, either. AWS can take a while to learn, so go easy on yourself if things don't fall into place the first time.

You Did It!

Now, instead of sharing a CodePen URL with your friends and family, you have your very own S3 bucket website endpoint to share.

Granted, it's still not your own personalized domain, but hey, it's still cool to know you just deployed a website the same way thousands of businesses do.

You've now not only learned the front end skills associated with freeCodeCamp's Front End Libraries Projects, but you also took your first steps into cloud computing by deploying a website. You should be very proud!

More on S3

Configuring an S3 bucket as a website endpoint is just one of many ways to use S3. We could also use S3 to store data that we want to pull into an application. For example, the quotes on our Random Quote Machine could be stored in S3 as a JSON file, and our front end could then request them.

That might seem like an odd adjustment to make when they could just be listed in our main.js file. But if we had other apps that needed access to those quotes, S3 could act as a central repository for them.
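Here is a sketch of what that could look like in main.js. It assumes a hypothetical quotes.json object, uploaded to a publicly readable bucket and shaped as an array of { quote, author } objects. The bucket URL is a placeholder (copy the real Object URL from the S3 console), and loadQuotes and pickRandomQuote are hypothetical names. If the page calling it is served from a different origin than the bucket, the bucket would also need a CORS configuration that allows GET requests.

```javascript
// Sketch for main.js: load quotes from a JSON file stored in S3 instead of
// hard-coding them. QUOTES_URL is a placeholder for your object's URL, and
// quotes.json is assumed to look like [{ "quote": "...", "author": "..." }].
const QUOTES_URL = "https://your-bucket-name.s3.amazonaws.com/quotes.json";

async function loadQuotes(url = QUOTES_URL) {
  // fetch() is built into browsers (and into Node.js 18 and later).
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Could not load quotes: HTTP ${response.status}`);
  }
  return response.json();
}

// Pick one quote at random. `random` defaults to Math.random but can be
// swapped for a fixed function when testing.
function pickRandomQuote(quotes, random = Math.random) {
  return quotes[Math.floor(random() * quotes.length)];
}

// Example use in main.js:
// loadQuotes()
//   .then((quotes) => console.log(pickRandomQuote(quotes)))
//   .catch((err) => console.error(err));
```

Any other app that needs the same quotes could call the same URL, which is the "central repository" idea in practice.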

This is, in fact, the most popular way to use S3: as a data store for applications. We could also use the Glacier option of S3 to archive objects that we don't expect to access often, which would cost less than the standard S3 bucket configuration.

One last idea, though not the last way to use S3: we could save logs from a running application to an S3 bucket, so that if there was a bug we could audit those logs to help identify the source of the problem.

Whatever the use case, the concept of S3 is the same: we are storing objects in a bucket, and those objects have permission settings that determine who can view, edit, or delete them (remember, we set our files to be publicly readable).

Next Up!

As stated earlier, AWS has hundreds of services, which means there are sometimes multiple ways to do a task. We walked through one way to host a site, but there are two more worth noting that will help you gain more exposure to AWS as a whole.

How to Deploy your freeCodeCamp Project with AWS Elastic Beanstalk

Now we're going to learn more about the AWS cloud platform services and see an alternative way to deploy your code, so you can experience more of what AWS has to offer.

Specifically, we are going to get an introduction to several of the core AWS services and resources: EC2 instances, Load Balancers, Auto Scaling Groups, and Security Groups.

Wow, that's a lot of topics to cover. In the first part above, we only looked at S3, so how are we going to learn about all these other services?

Well, thankfully AWS offers a service called Elastic Beanstalk, which bootstraps the deployment process for web applications. By deploying your freeCodeCamp project via Elastic Beanstalk, you can quickly gain experience with these core AWS services and resources.

Before We Jump Back into AWS

We discussed in Part 1 that to "deploy" code means to configure a computer in a way that serves the project and makes it accessible to visitors. The nice thing about cloud computing platforms is that the configuration of that server can be done for us if we want.

Consider our S3 deployment for a second. Our files were stored on a machine owned by AWS, and AWS took care of configuring that computer to serve the index.html page and give us an endpoint for viewing the project.

Aside from telling S3 the name of our index.html file, we did none of that configuration, and yet we still walked away with a deployed project and an endpoint to view it.

When we use Elastic Beanstalk, AWS will similarly handle a lot of configuration for us. But this time we are also going to do a bit of configuring ourselves.

By doing this, we will see an alternative and very popular method for deploying a web application. This time, we will add code that "serves" the index.html, but we'll let AWS handle launching the hardware and giving us an endpoint to view our project.

Let's Configure!

We are going to add some code to our project to serve our index.html. Remember, S3 did this behind the scenes for us.

I have created a Git repository for you to clone or download and use for this tutorial. If you're familiar with Git, feel free to clone the repo and jump ahead to Paste Your Code. If you're not familiar with Git, follow the instructions for downloading this repo.

Download the Repo

- Go to https://github.com/dalumiller/fcc-to-aws-part2

- Click on the green Code dropdown menu and then select Download ZIP

- Unzip/extract the files from the zipped download

Paste Your Code

In the newly downloaded/cloned folder (which I'll refer to as the fcc-to-aws-part2 folder), we want to paste the code from your index.html, main.js, and styles.css files that we uploaded to S3.

In the fcc-to-aws-part2 folder, there is another folder named public, which has empty index.html, main.js, and styles.css files. Go ahead and paste your code into those.

After pasting, let's confirm everything is working so far.

Open a new browser tab, then press Ctrl+O and navigate to fcc-to-aws-part2/public/index.html. Open index.html in that new tab. Your app should be running now.

If it is not, stop and make sure you pasted the correct code into the correct .html, .js, and .css files. Also, make sure you're using the same code that worked during your S3 deployment.

With that working, we are now ready to discuss what all this extra stuff in the fcc-to-aws-part2 folder is. Make sure you do not make changes to any files other than index.html, main.js, and styles.css.

Configuration with Node.js & Express.js

Remember I said we were going to do some configuration this time? Node.js (aka Node) and Express.js are what we're using to achieve that configuration.

freeCodeCamp has a tutorial on Node and Express, so if you'd like to pause and go through that, feel free. But I'll also provide a brief introduction explaining why and how we are using Node and Express.

Why Use Node?

Node.js is a JavaScript runtime for server-side applications. That is a lot of jargon, so let's break it down a bit.

"Node is a JavaScript runtime": in the world of programming, a runtime is an execution model. In other words, it's the software that implements how code gets executed. So Node is software that implements how to execute JavaScript code.

When we have JavaScript code like this:

console.log("Hello World")

Node knows what to do with that code.

"…for server-side applications": remember that we are trying to deploy our code to be served by a server, and that a server is just a computer. Also, note that a server is different from a client, and in the case of a website, the client is the web browser.

When you open your project in a web browser, the browser handles reading the index.html, main.js, and styles.css files. That's right, the browser (client) knows how to read and execute JavaScript code, so when it sees console.log("Hello World") it knows what to do.

Our main.js file is JavaScript code that is run client-side, by the browser. But Node is for server-side JavaScript. So your browser knows how to run that JavaScript code, but how does the computer that serves our website know how to run JavaScript? Node.

So, all together, "Node is a JavaScript runtime for server-side applications" means that Node is the software that implements how to execute JavaScript code on a server, as opposed to a client like a web browser.
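You can see the client/server split for yourself with a small file that runs in both places. This sketch checks for globals that only exist in one environment: window in the browser, and process.versions.node in Node. describeRuntime is a hypothetical name. Save the file as hello.js and run node hello.js in a terminal, or paste it into your browser's developer console, and compare the output.

```javascript
// Sketch: one file that reports where it is running. `window` only exists
// in browsers; `process.versions.node` only exists in Node.js.
// describeRuntime is a hypothetical helper written for this example.
function describeRuntime() {
  if (typeof window !== "undefined" && typeof window.document !== "undefined") {
    return "client: running in a web browser";
  }
  if (typeof process !== "undefined" && process.versions && process.versions.node) {
    return `server: running in Node.js ${process.versions.node}`;
  }
  return "unknown runtime";
}

console.log("Hello World"); // both runtimes know what to do with this line
console.log(describeRuntime());
```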

Without Node, the computer/server does not know how to run JavaScript code.

Why Use Express?

While Node gives us the server-side runtime, which is the environment where we can run JavaScript code, Express gives us a framework for serving web applications.

When we deployed via S3, we told S3 the file name we wanted served (index.html). Now, Express will be where we configure which files we want served.

If we had a website with different routes (e.g., www.example.com/home, /media, /about, /contact), then we might have a different HTML file for each of those pages. We would use Express to serve those pages when the web browser requested them.

For example, when the web browser requested www.example.com/contact, Express would receive that request from the browser and respond with contact.html.
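Here's a plain-JavaScript sketch of that lookup: a table from route to file. It illustrates the idea only and is not how Express stores routes. In an actual Express app, you'd typically register each route with app.get() and answer with res.sendFile(). The routes and file names below are the hypothetical examples from the paragraph above.

```javascript
// Plain-JavaScript sketch (not Express's API) of mapping routes to HTML files.
// The routes and file names are hypothetical examples.
const routes = new Map([
  ["/home", "home.html"],
  ["/media", "media.html"],
  ["/about", "about.html"],
  ["/contact", "contact.html"],
]);

function fileForRoute(urlPath) {
  // Treat "/contact/" the same as "/contact" (but leave "/" alone).
  const normalized = urlPath.length > 1 ? urlPath.replace(/\/+$/, "") : urlPath;
  // null stands for "no page here", which a server would answer with a 404.
  return routes.get(normalized) ?? null;
}

console.log(fileForRoute("/contact"));  // contact.html
console.log(fileForRoute("/contact/")); // contact.html
console.log(fileForRoute("/pricing"));  // null
```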

app.js

Now we know that Node is what lets us run JavaScript code on the server, and Express handles requests from the browser. So let's look at the app.js file in fcc-to-aws-part2 and read it line by line to understand what we've added to our project.

First…

const express = require("express");

This declares a variable called express, and the = sign means we are assigning it a value. But what's this require("express")?

The require function is a function Node knows about. When Node sees require("express"), it looks for a folder by the same name in the node_modules folder. You have this node_modules folder in your fcc-to-aws-part2 folder.

Once Node finds express inside the node_modules folder, it will import the code from the express folder and assign it to the variable we declared. This lets us use the code from the express folder without having to write it ourselves. Importing code into our code like this is the concept of modules.

A module is just a package of code. It could be one line long or much longer. Therefore, a NODE_module (emphasis added to node) is a package of code that can run in the Node runtime. We are using, or require-ing, the express module.

The express module is the Express.js web application framework we discussed earlier.

I won't go into how to add modules to the node_modules folder, but for now know that by declaring the variable express and using the require("express") syntax, we are bringing in the Express.js framework and making it accessible via the variable we assigned it to.

There was a lot packed into those previous few paragraphs, so I recommend slowing down and reading them again to make sure you really got it!

Next up…

const path = require("path");

Ah! This should look familiar now. We are bringing in another module, this time the path module, and assigning it to the path variable.

You might have noticed a pattern: the variable name we use matches the module name. It doesn't have to be that way. We could assign the path module to a foobar variable. But it makes more sense to name it what it is.

What does the path module do? It is a module (just a package of code) that lets us work with file and folder paths. This way, we can use a JavaScript syntax that Node understands to build paths to files/folders on our server. That will come in handy when we want to reference where index.html is located in our project.
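Here's what path.join does with arguments like the ones in app.js. The sketch uses path.posix so the output is the same on every operating system, since plain path.join uses backslashes on Windows. /projects/fcc-to-aws-part2 is a made-up stand-in for __dirname, the folder containing app.js. The sketch also shows the foobar point from above: the variable name doesn't matter, the module is the same.

```javascript
const path = require("path");

// Hypothetical stand-in for __dirname (the folder that contains app.js).
const appDir = "/projects/fcc-to-aws-part2";

// join() glues the pieces together with "/" separators...
console.log(path.posix.join(appDir, "public"));               // /projects/fcc-to-aws-part2/public
console.log(path.posix.join(appDir, "public", "index.html")); // /projects/fcc-to-aws-part2/public/index.html
// ...and normalizes ".." (go up one folder) along the way.
console.log(path.posix.join(appDir, "public", "..", "app.js")); // /projects/fcc-to-aws-part2/app.js

// The variable name is your choice; both names point to the same module.
const foobar = require("path");
console.log(foobar === path); // true
```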

Alright, moving on…

const app = express();

Express, again? That's right. The first time, we were just importing the module; now we are using it.

To use the Express framework we have to instantiate it, meaning we have to call the express function, express(), and assign what it returns to the app variable.

"Whoa, but Luke, why not put this line of code right after the earlier express line of code?"

Good question. It is a common pattern to import (or use require()) all the modules you'll need at the top of your code, then use them after you're done importing.

Now for the big one…

app.use(express.static(path.join(__dirname, "public")));

Whoa there! Let's break this apart a little.

First, we are calling a function, the app.use() function. This is telling our Express application that we want to USE another function in our app. Makes sense.

The function we are telling Express we want to run is express.static(), which is passed in as the argument to .use(). So app.use() is telling Express we want to use some code in the app, and specifically here we want to USE express.static(path.join(__dirname, "public")).

Now, express.static() is an Express function, which we can use since it's part of the module that we imported and assigned to the express variable.

The .static() function handles serving static files. I hope your ears perked up and your eyes opened wide! I'll say it again. The .static() function handles SERVING static files. Serving!

Remember, in this deployment approach we are handling a bit more of the configuration to SERVE our project. Here is the Express function we are using to say, "I want to serve some static files". Static files are files sent to the browser exactly as they are stored; for us, that means index.html, main.js, and styles.css.

So app.use() was saying, "Hey, I want to run some code for this app, given as my argument". Specifically, we want to run express.static(), which says, "I want to deliver static files, like an index.html", and its argument tells it where to find those static files.

So let's look at path.join(__dirname, "public") to understand how it tells express.static() where our static files are.

Earlier, we imported the path module so we could work with file and folder paths on our server/computer.

Well, we want to access the index.html file, which is in the public folder. We use the path module's .join() function to say, "hey, starting from the folder this file (app.js) is in, which Node gives us as __dirname, go to the public folder to find the files I want". That returns the full path to the public folder, which we hand to express.static(). express.static() then serves index.html, main.js, and styles.css from that folder, and app.use() plugs it into our app so those files get served (SERVE!) to our visitors.

All together now!

app.use() = "when the browser makes a request to the app, I want to run express.static()"

express.static() = "I am going to deliver some static files and they are located here…"

path.join(__dirname, "public") = "in the public folder next to app.js"

Yay! We did it! We configured our Express app, running in the Node runtime, to deliver the index.html, main.js, and styles.css files when someone visits our site.
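To appreciate how much work that one line saves, here is a rough equivalent written with only Node's built-in http, fs, and path modules. It's a simplified sketch for comparison, not how express.static is implemented; Express handles many more cases, such as caching headers and more file types. resolvePublicFile and createStaticServer are hypothetical names, and nothing listens on a port until you call listen() yourself.

```javascript
// Rough, simplified stand-in for
//   app.use(express.static(path.join(__dirname, "public")));
// using only Node's built-in modules. Not how Express implements it.
const http = require("http");
const fs = require("fs");
const path = require("path");

// A few content types so the browser knows what each file is.
const CONTENT_TYPES = {
  ".html": "text/html; charset=utf-8",
  ".css": "text/css; charset=utf-8",
  ".js": "text/javascript; charset=utf-8",
};

// Map a request URL such as "/" or "/main.js?v=2" to a file inside publicDir.
// Returns null for paths that would escape the folder, like "/../app.js".
function resolvePublicFile(publicDir, urlPath) {
  const root = path.resolve(publicDir);
  const pathname = urlPath.split("?")[0];
  let relative;
  try {
    relative = pathname === "/" ? "index.html" : decodeURIComponent(pathname);
  } catch {
    return null; // malformed percent-encoding such as "%E0%A4%A"
  }
  const filePath = path.join(root, relative);
  return filePath.startsWith(root + path.sep) ? filePath : null;
}

function createStaticServer(publicDir) {
  return http.createServer((req, res) => {
    const filePath = resolvePublicFile(publicDir, req.url);
    if (filePath === null) {
      res.writeHead(403, { "Content-Type": "text/plain" });
      res.end("Forbidden");
      return;
    }
    fs.readFile(filePath, (err, data) => {
      if (err) {
        // Missing file (or a folder): answer with a 404.
        res.writeHead(404, { "Content-Type": "text/plain" });
        res.end("Not found");
        return;
      }
      const type = CONTENT_TYPES[path.extname(filePath)] || "application/octet-stream";
      res.writeHead(200, { "Content-Type": type });
      res.end(data);
    });
  });
}

// Usage, from a file next to the public folder (serves it like app.js does):
// createStaticServer(path.join(__dirname, "public")).listen(8080);
```

Compare that to the single app.use(express.static(...)) line: that difference is the configuration Express takes care of for us.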

But wait… there's more:

const port = 8080;
app.listen(port);

Remember that the app variable is our instance of the Express framework. The .listen() function is the app telling the computer, "hey, any requests made on port 8080, bring them my way!"

Ports and sockets are a more advanced topic, so we won't get into them now. Just know that a computer/server has many ports, which are like access points, and we are configuring our Node/Express app to listen on only one access point, 8080.
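Hard-coding 8080 works for this project. By default, Elastic Beanstalk's Node.js platform forwards incoming requests to your app on port 8080, and it lets you pick a different port through a PORT environment property. So a common variation is to read process.env.PORT and fall back to 8080. Here's a sketch of that variation; resolvePort is a hypothetical helper, and this is not what the repo's app.js does.

```javascript
// Sketch: use the PORT environment variable when it holds a valid port
// number, otherwise fall back to 8080 (the value in this project's app.js).
function resolvePort(env = process.env, fallback = 8080) {
  const parsed = Number.parseInt(env.PORT, 10);
  const isValid = Number.isInteger(parsed) && parsed > 0 && parsed < 65536;
  return isValid ? parsed : fallback;
}

const port = resolvePort();
console.log("listening on port: " + port);
// In app.js, you would then pass it along as before: app.listen(port);
```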

Lastly…

console.log("listening on port: " + port);

This is standard practice for Express apps: we are just logging on the computer which port we're running on. It gives us some verification that the Express application works.

Great job! That was a lot of code to walk through.

Now, if you're familiar enough with navigating via a terminal, we can test this Express app locally on our computer before moving on to AWS. Making sure it works locally first will help us if we hit errors in AWS, because we'll know those errors can't be caused by our Express app not working.

How to Test Our Node/Express.js App

If you open the package.json file, you'll see a "scripts" section. This package.json file holds metadata for our Node application, and it can also contain configurable commands to run in the "scripts" section.

I've included a "start" script, which runs the app.js file in the Node environment, which in turn runs the Express.js code we just discussed. This "start" script is how we run our project.

To test this out, navigate to the fcc-to-aws-part2 folder in your terminal and enter npm start to start the app.

After running that command, you should immediately see our console.log() message informing us that the app is running on port 8080.

Now, to view your project, open a web browser, enter localhost:8080 in your address bar, and press Enter. You should now see your project running!

If you don't, make sure you are using the correct index.html, main.js, and styles.css files, and that you didn't change any of the code from fcc-to-aws-part2.

Woohoo!

Alright, now we have configured Node and Express to serve our project. Our local computer is acting as the server at the moment, but we want anyone in the world to be able to view our project, and they can't do that via our localhost:8080 address.

So we are going to use AWS to host a server for us, put our app on it, and then let AWS manage the configuration necessary to generate the URL endpoint for the world to access. That's where the AWS service called Elastic Beanstalk comes into play. Let's get to it!

Go to AWS Elastic Beanstalk

After logging into your AWS account, search for the Elastic Beanstalk service in the top search bar. Once there, click the Create Application button.

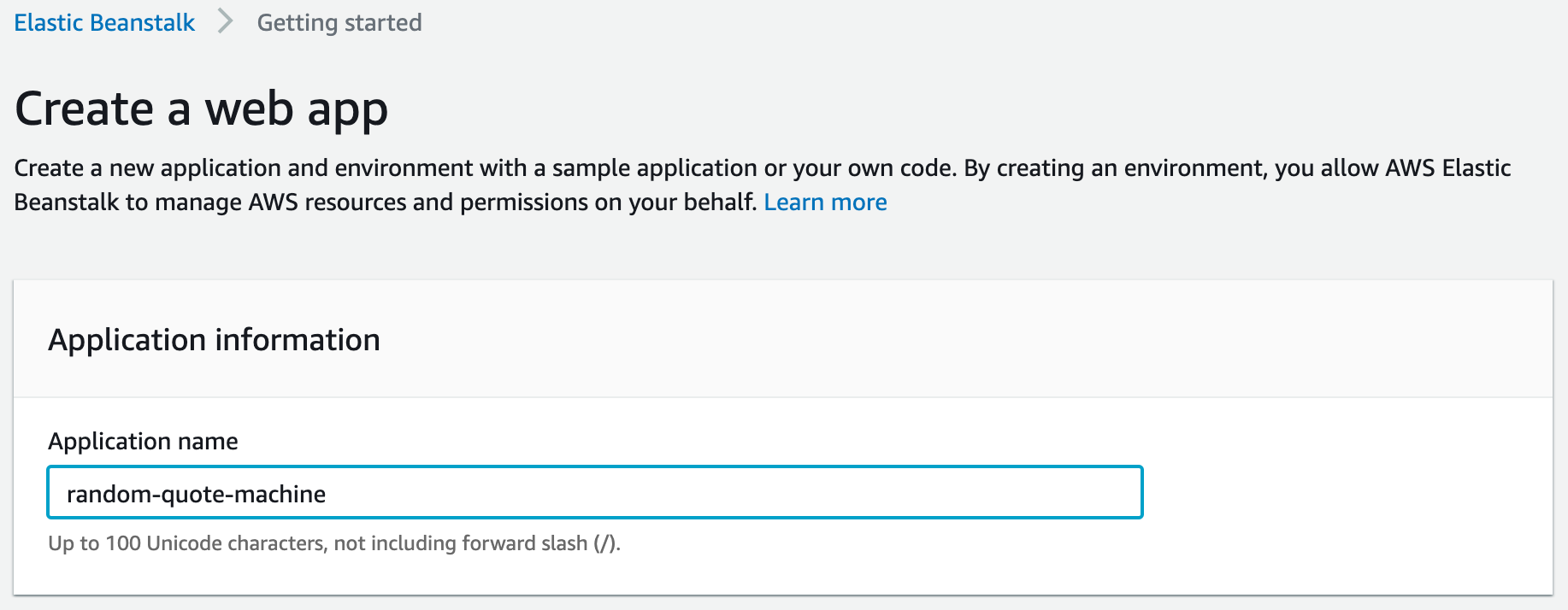

Next we'll add the name for our Elastic Beanstalk application.

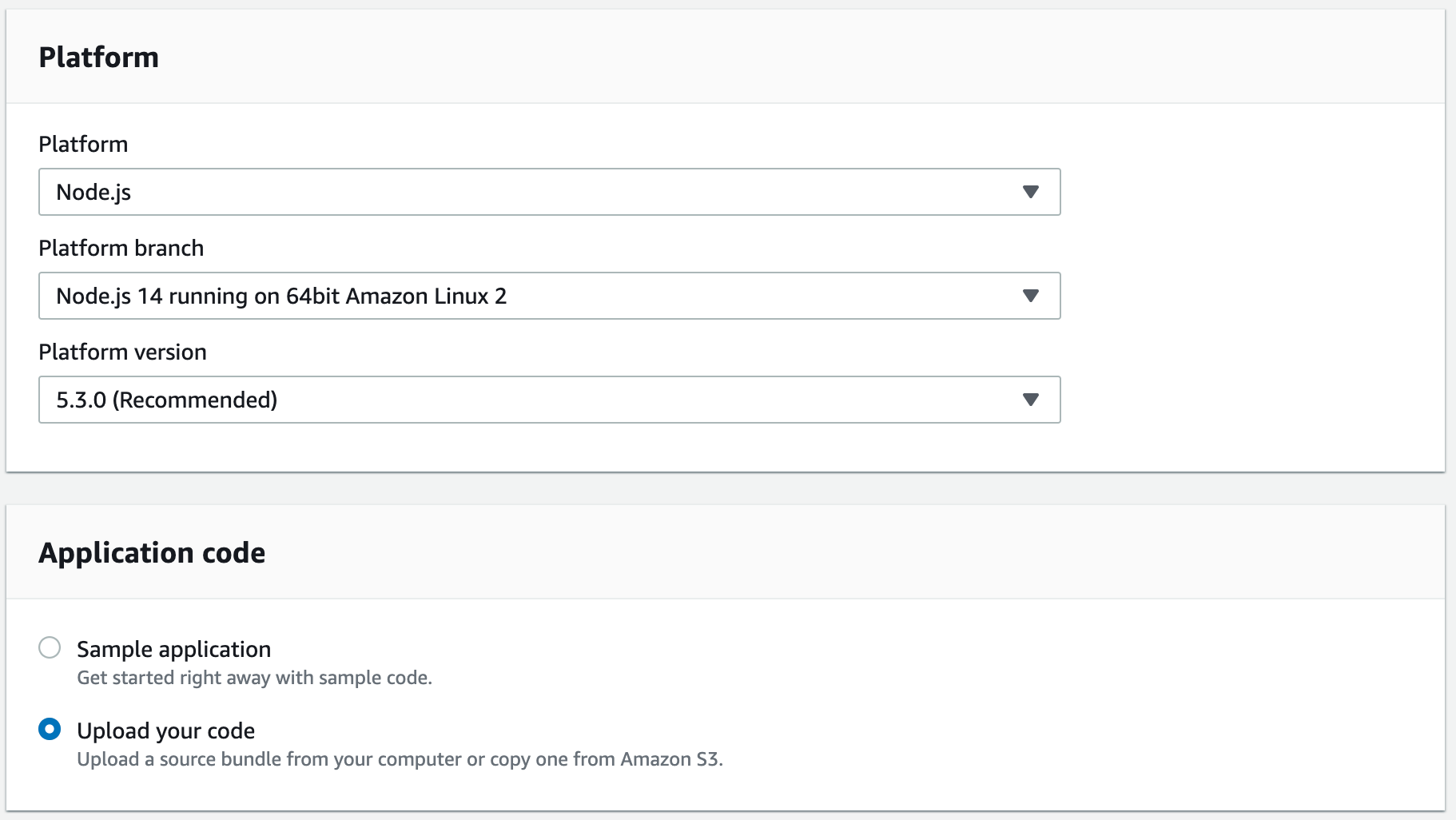

Skip the Tags section, and let's enter information for the Platform and Application Code sections.

Our Platform is Node.js, the Platform Branch is Node.js 14 running on 64bit Amazon Linux 2 (that's telling us what kind of server is running Node), and the Platform Version is whatever AWS recommends. Then, for Application code, we are going to upload our code.

Click the Create Application button.

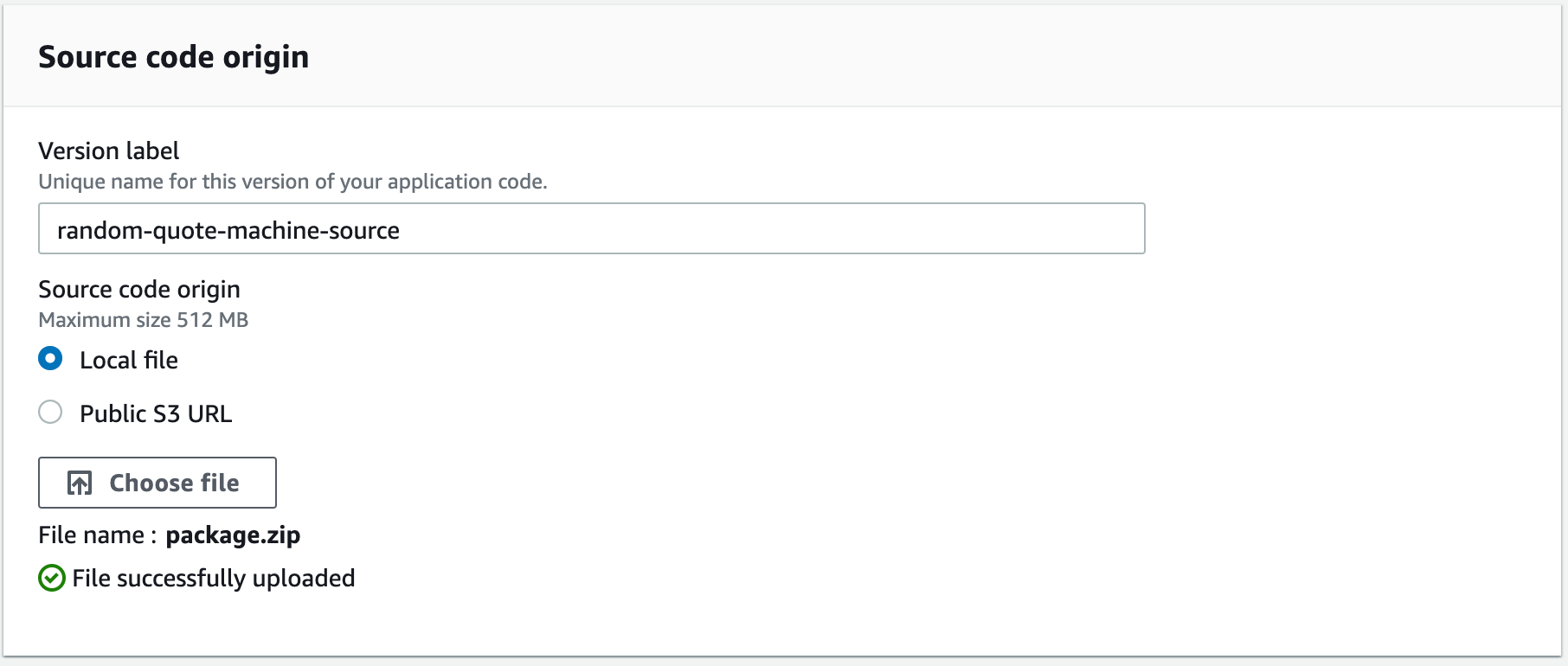

Upload And Deploy

Before we click the Upload and Deploy button, we need to zip our project. Open the fcc-to-aws-part2 folder, select all of the files in it, and zip them up. Do NOT zip the fcc-to-aws-part2 folder itself; that won't work.
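The reason zipping the folder fails is that Elastic Beanstalk expects the application files, including package.json, at the root of the source bundle rather than inside a top-level folder. The sketch below checks a list of entry paths from a zip file (for example, as printed by `unzip -l`); checkBundleLayout and its return shape are hypothetical, made up for illustration.

```javascript
// Hypothetical check: given the entry paths inside a zip, decide whether
// package.json sits at the root of the bundle, as Elastic Beanstalk expects.
function checkBundleLayout(entries) {
  // Normalize Windows separators and a leading "./"
  const names = entries.map((e) => e.replace(/\\/g, '/').replace(/^\.\//, ''));
  if (names.includes('package.json')) {
    return { ok: true, problem: null };
  }
  // The common mistake: everything nested under one top-level folder.
  const nested = names.find((n) => /^[^/]+\/package\.json$/.test(n));
  if (nested) {
    const folder = nested.split('/')[0];
    return { ok: false, problem: `package.json is inside "${folder}/"; zip that folder's contents instead` };
  }
  return { ok: false, problem: 'no package.json found in the bundle' };
}

console.log(checkBundleLayout(['package.json', 'app.js', 'index.html']).ok); // true
```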

Now, with your files zipped, click the Upload and Deploy button in your Elastic Beanstalk environment. Select your zipped file.

After you upload your code, a black box will appear with a series of logs showing the steps being taken to launch the Elastic Beanstalk application. Revel in the fact that each log entry is a configuration we did not have to do.

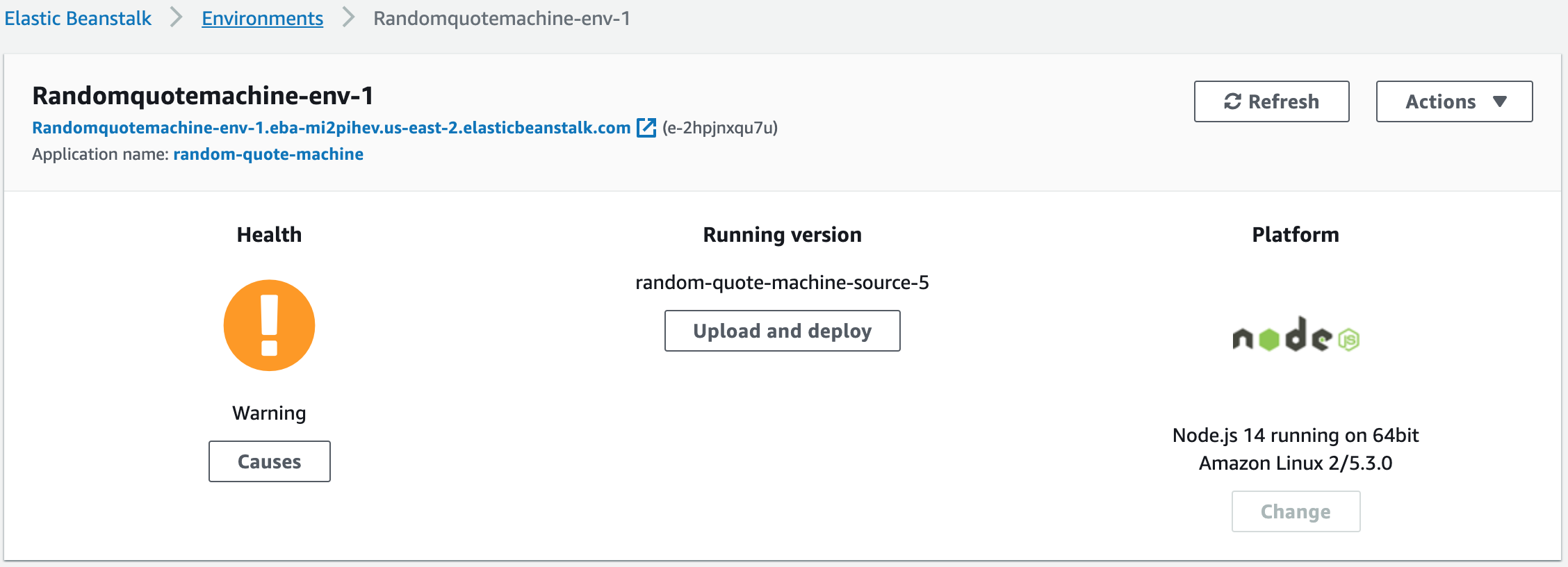

Once it finishes, you'll be taken to the main view of your app. You'll see your environment has a Health status that is probably red at the start.

Do not be alarmed by this. AWS is now going through the configuration process that we don't want to do, namely launching the server, creating the URL endpoint, and then running our app. It takes a few minutes for that to finish.

This is when Elastic Beanstalk is doing all of the configuration for us that makes cloud computing platforms so handy!

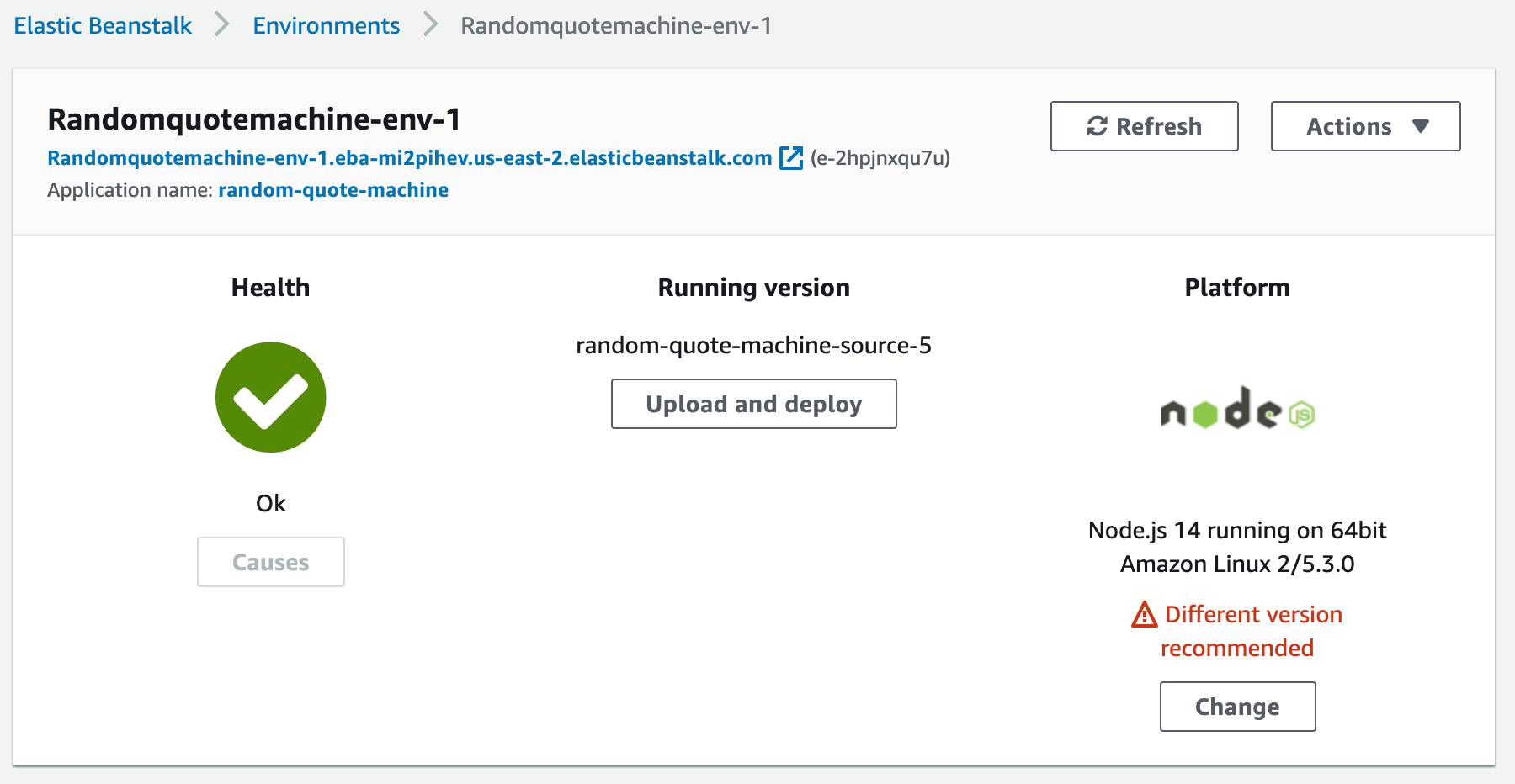

Notice at the top that you already have a URL endpoint given to you. Once the Health improves, you should be able to open that link to see your project.

If your health status never changes to orange and then green, something is wrong. The best thing to do is re-download fcc-to-aws-part2, paste your code in, re-zip the contents of fcc-to-aws-part2 (not the fcc-to-aws-part2 folder itself), and upload it again to Elastic Beanstalk.

Once the health status improves, you should be able to open the link and see your project. More importantly, anyone in the world can now see your project with that link!
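Rather than refreshing the browser while the environment launches, you could poll the endpoint from a script. This sketch assumes Node.js 18 or later (for the built-in fetch) and treats an HTTP 200 response as "up"; waitForEndpoint and its default attempt count and delay are made-up values, not anything Elastic Beanstalk defines.

```javascript
// Hypothetical helper: poll a URL until it answers with HTTP 200,
// giving up after a fixed number of attempts.
async function waitForEndpoint(url, { attempts = 20, delayMs = 15000 } = {}) {
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      const res = await fetch(url);
      await res.text(); // read the body so the connection is released
      if (res.status === 200) return attempt; // how many tries it took
    } catch (err) {
      // DNS or connection errors are expected while the environment is launching.
    }
    if (attempt < attempts) {
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw new Error(`${url} did not return 200 after ${attempts} attempts`);
}

// Usage (replace with the URL shown at the top of your environment page):
//   waitForEndpoint('http://your-environment-url')
//     .then((n) => console.log(`up after ${n} attempt(s)`), console.error);
```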

You Did It!

Congratulations!

I know this was a longer and more detailed process than the S3 deployment, but you did it.

We took on some of the configuration ourselves this time by creating a Node/Express app to serve our static files, but we still let AWS handle creating and configuring the server, its environment, and the endpoint for us to run and view our project on.

Even with the time we spent going through the Express code, the amount of time it took to deploy this project is minimal, since AWS is automating so much for us.

We didn't have to take the time to buy a server, install programs onto it, set up a URL endpoint for it, or install our project onto it. That's the benefit of cloud computing platforms like AWS.

Note: Be sure to stop or terminate your EC2 Instance, or delete your Elastic Beanstalk Application, when you're done with it. If you do not, you'll be charged for however long your application runs.

The Underlying Services of Elastic Beanstalk

I mentioned at the start of this section that the beauty of Elastic Beanstalk is that by using this service we actually get an introduction to many services: EC2, Load Balancers, Auto Scaling Groups, and even CloudWatch.

Below is a brief explanation of each to further your learning about AWS and its services.

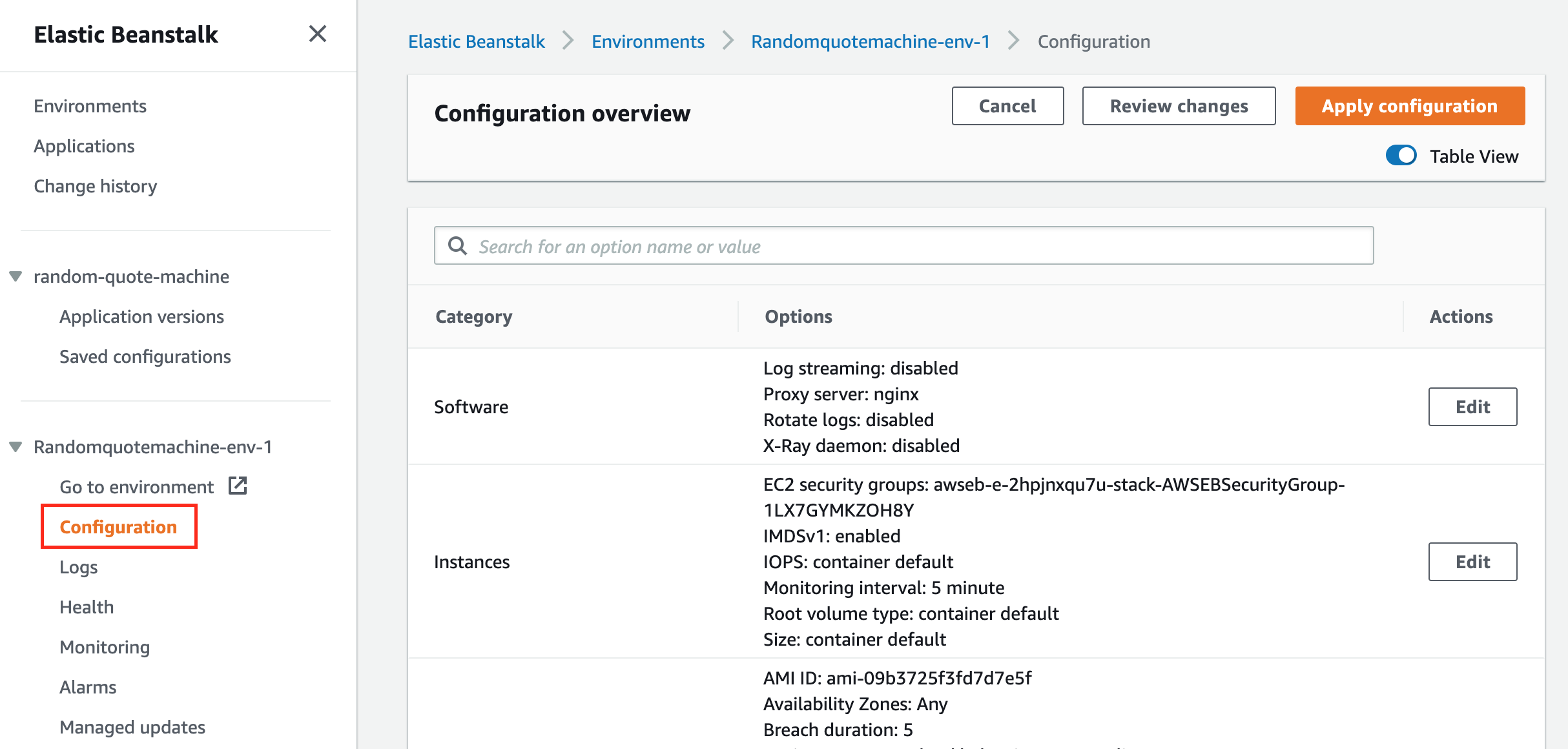

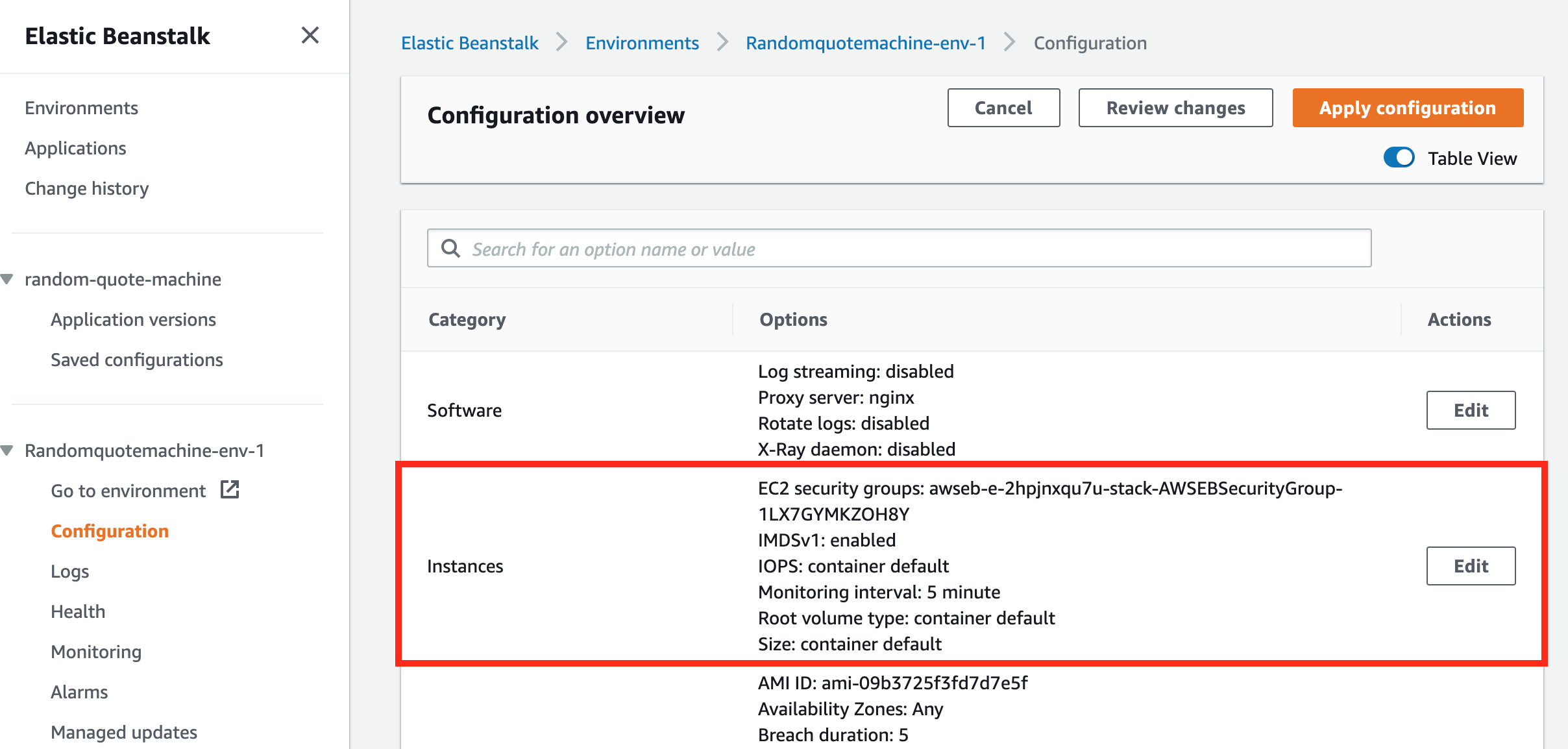

To get a better sense of the depth of configuration Elastic Beanstalk did for us, navigate to the Configuration link and scroll through the page.

EC2

In the Configuration Overview there is a category called Instances.

Instance is another word for server, or computer. Remember that we're deploying our code to a server; AWS calls those servers Instances, and more specifically EC2 Instances.

EC2, short for Elastic Compute Cloud, is an AWS service that lets you launch EC2 Instances very quickly. We can "spin up" a server using EC2 and put whatever we want on it.

In our case, Elastic Beanstalk ran the EC2 service for us and started an EC2 Instance for our application to be hosted on.

If you still have your Elastic Beanstalk app running, we can go look at your EC2 Instance. At the top of your screen, in the AWS search bar, enter EC2 and press Enter.

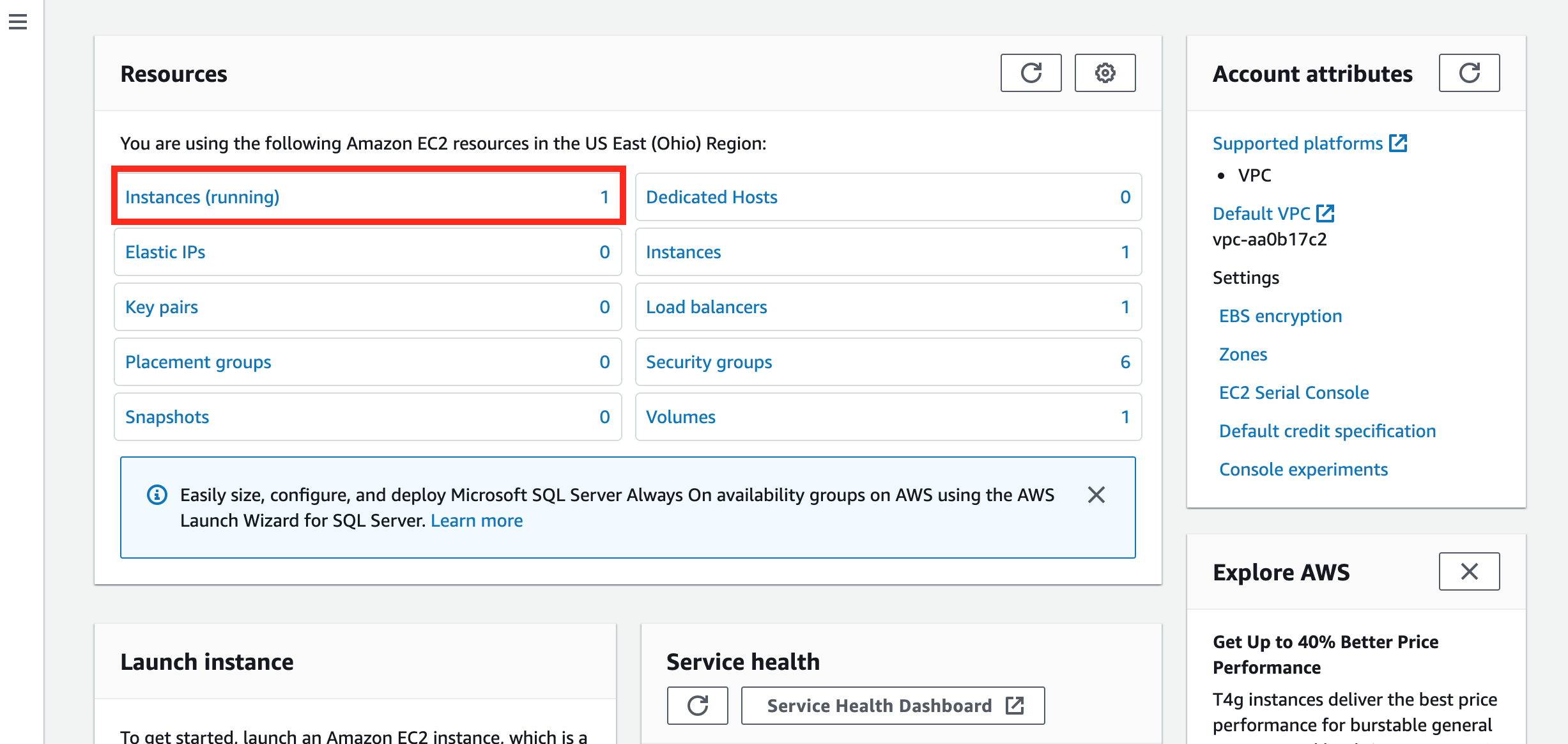

You should see that you have one instance running, like this…

If you click on the Instances (running) link, you'll get to look at the specifics of the EC2 instance. This EC2, again, is just a computer running in an AWS data center that has your code on it. The EC2 was launched by Elastic Beanstalk for us.

Load Balancer

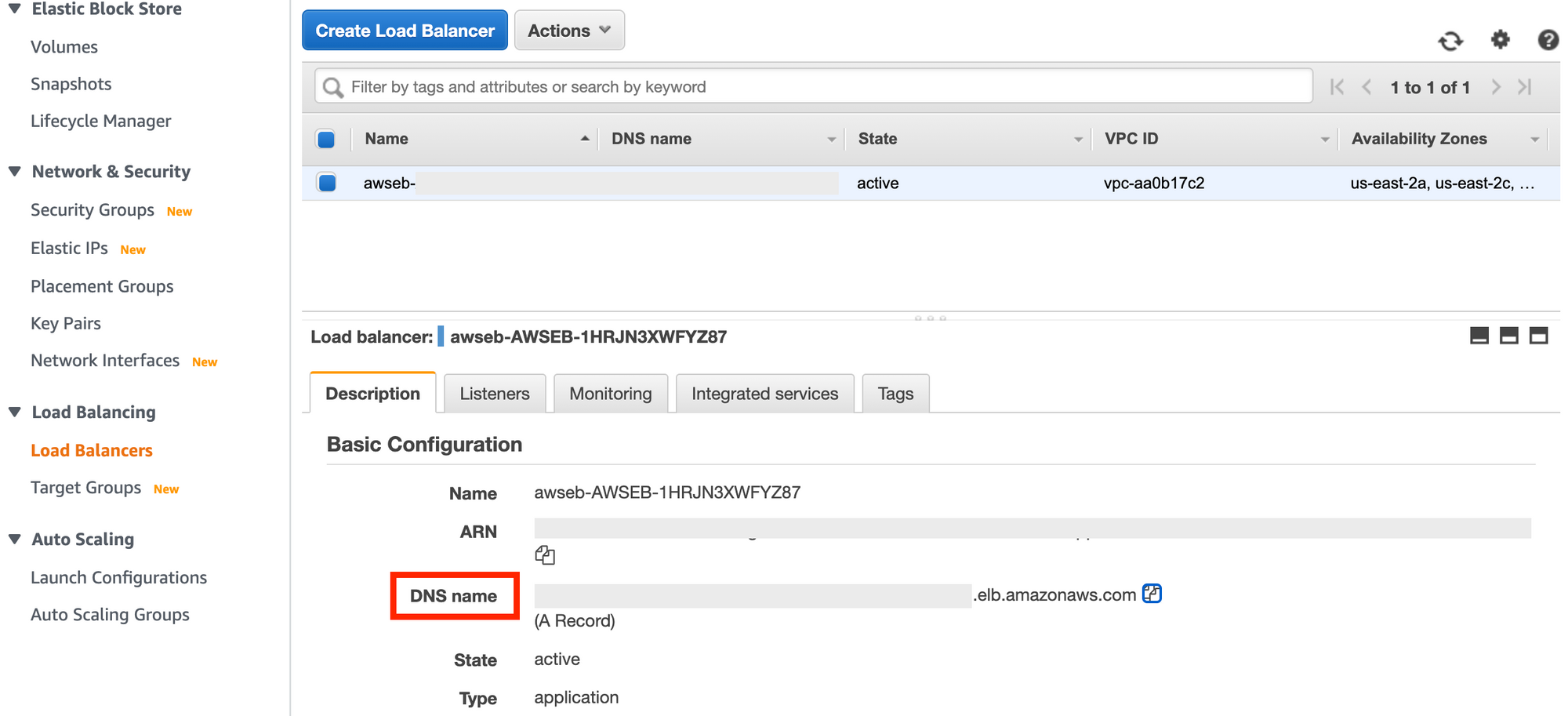

Not only did Elastic Beanstalk create an EC2 Instance for us, it also created a Load Balancer. In the EC2 Management Console, scroll down the left navigation panel to Load Balancers and click the link to see the one made for you.

A load balancer's purpose is to distribute incoming traffic across multiple targets. The target in our case is an EC2, but we only have one running, so a load balancer is not all that useful at the moment.

Let's pretend for a moment that our app went viral and we had tens of thousands of people trying to access the endpoint Elastic Beanstalk gave us. That single EC2 Instance would be overwhelmed with traffic. Requests would time out, visitors would get frustrated, and our site would suffer since we don't have enough EC2s to handle the load.

But! If we DID have more EC2 Instances running, each with our project deployed on it, we'd be able to handle going viral.

There is a problem that arises from having multiple EC2s, though. We need each EC2 instance to be reachable through that same Elastic Beanstalk endpoint, and that's tricky networking.

That's where the Load Balancer comes in. It provides a single access point for our Elastic Beanstalk endpoint to target, and the Load Balancer then handles distributing that traffic among the different EC2s.
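To picture what "distributing traffic" means, here is a toy round-robin picker: each new request goes to the next instance in the list. Round robin is one common strategy, but this is only an illustration of the idea, not how AWS implements its load balancers (which also run health checks, among other things). The instance IDs are made up.

```javascript
// Toy round-robin balancer: hand out targets in turn so that no single
// instance receives every request.
function createRoundRobin(targets) {
  if (targets.length === 0) throw new Error('need at least one target');
  let next = 0;
  return function pick() {
    const target = targets[next];
    next = (next + 1) % targets.length;
    return target;
  };
}

// Nine requests spread across three hypothetical instances.
const pick = createRoundRobin(['i-aaa', 'i-bbb', 'i-ccc']);
const counts = {};
for (let i = 0; i < 9; i++) {
  const t = pick();
  counts[t] = (counts[t] || 0) + 1;
}
console.log(counts); // { 'i-aaa': 3, 'i-bbb': 3, 'i-ccc': 3 }
```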

If you look at your Load Balancer's Basic Configuration, you'll see a DNS Name, which looks a lot like the Elastic Beanstalk endpoint. If you open it, your app should run. That's because the Elastic Beanstalk endpoint actually points to this endpoint, which is able to balance traffic among multiple targets.

Now, you might be wondering, "Luke, how do I get more EC2s to launch so that I can capitalize on this sweet load balancing action?" Glad you asked! The answer is Auto Scaling Groups!

Auto Scaling Group

As the name suggests, this AWS service automatically scales a group of EC2 Instances based on a set of criteria. We currently have only one EC2 Instance running that our Load Balancer targets, but an Auto Scaling Group has configurable thresholds to determine when to add or remove instances.

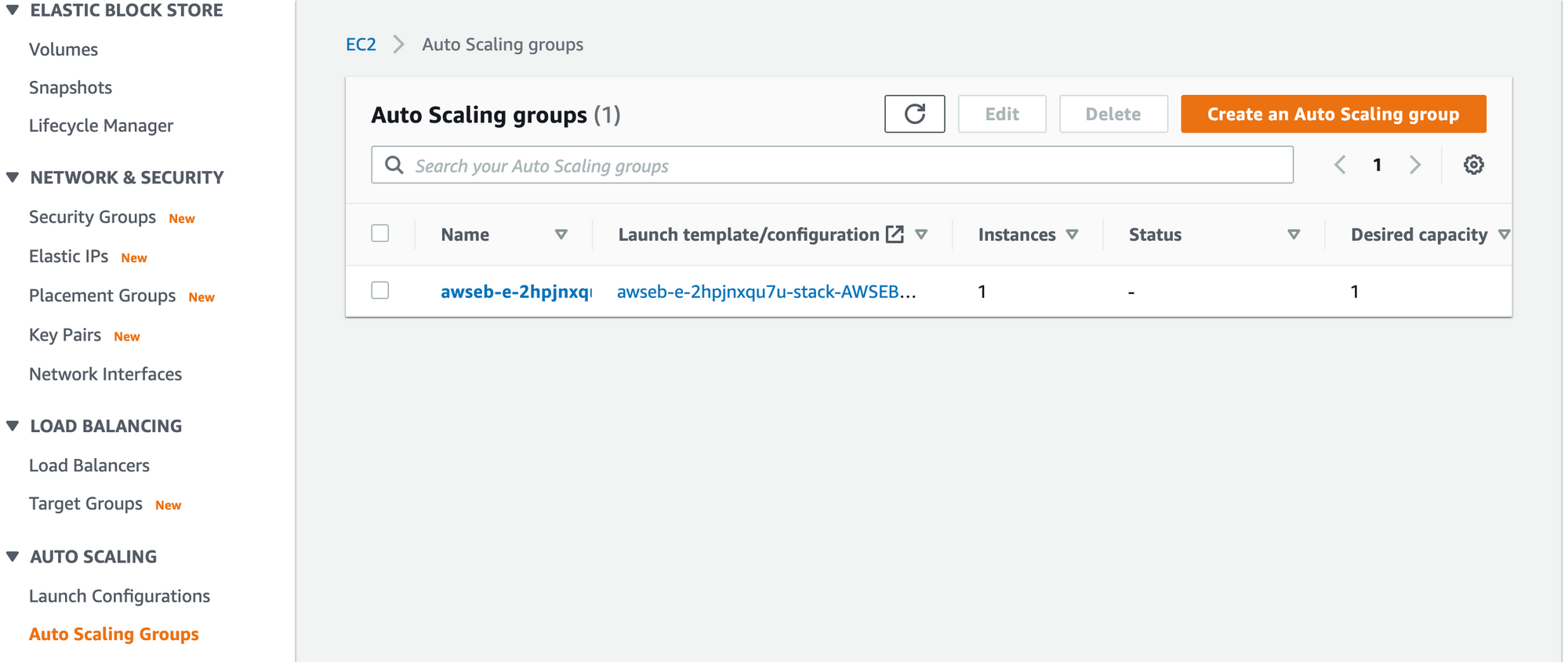

To see your Auto Scaling Group, scroll down the left-side navigation of your EC2 Management Console until you find Auto Scaling Groups, then click the checkbox next to the single Auto Scaling Group listed.

Feel free to peruse the various details found in the tabs, but I want to point out a few of them.

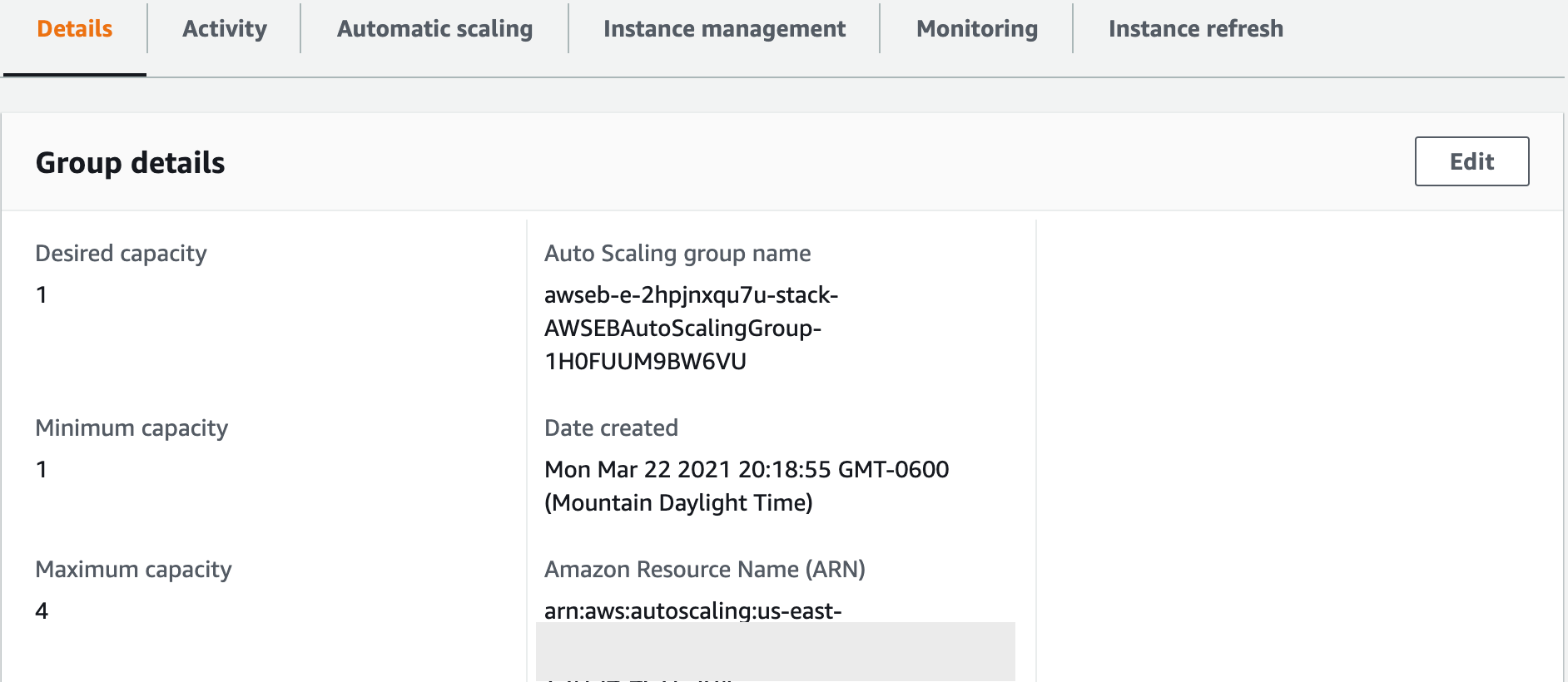

First, click on the Details tab and look at the Desired, Minimum, and Maximum Capacity settings. They should default to 1, 1, and 4 respectively. These values are configurable, and they're our way of telling AWS how many EC2 Instances we want running.

Since EC2 Instances cost money to run, companies want to fine-tune how often new ones are added or removed. Ours says that we want one running, a minimum of one, and four at most. If you edit the desired value to 2, you'll see a new instance launch.

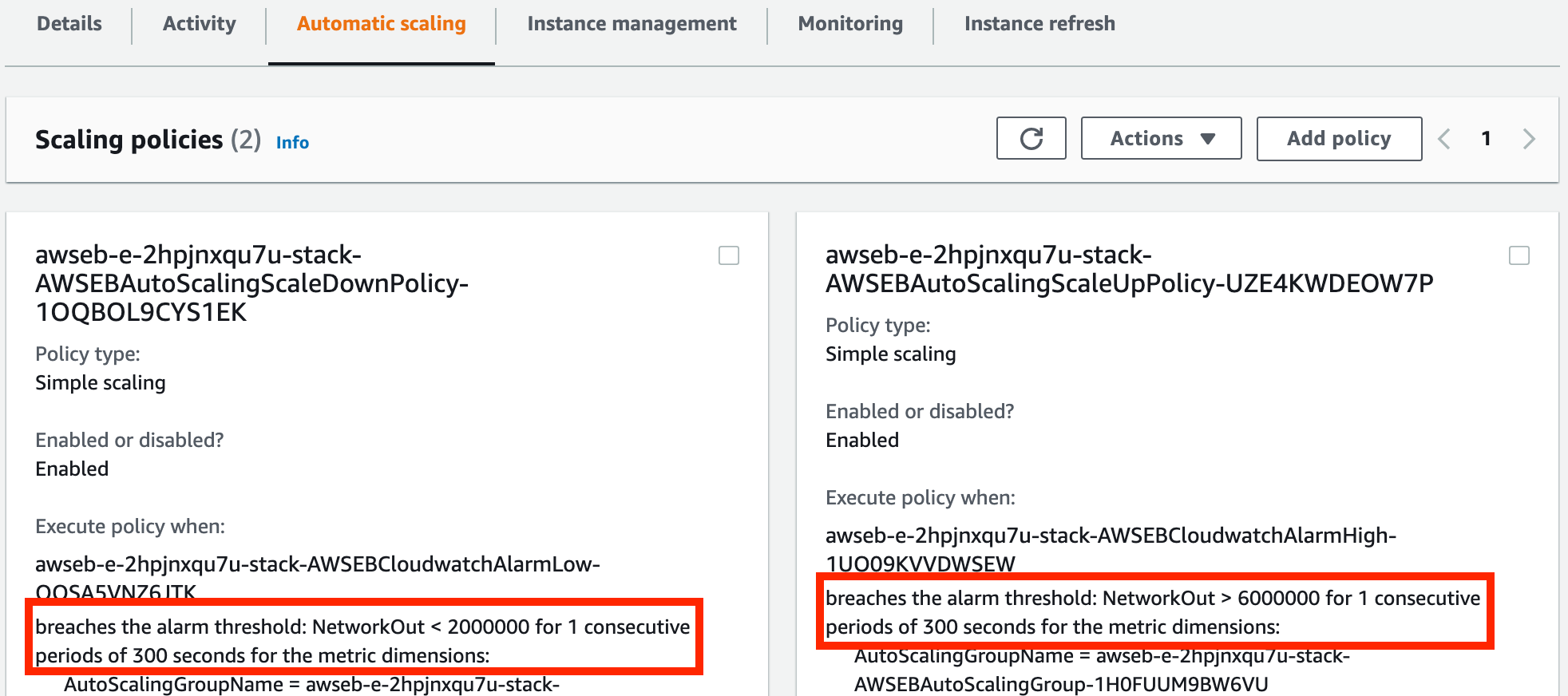

But how does the Auto Scaling Group know when to add or remove instances? Click on the Automatic Scaling tab to see our current configuration for making that decision.

Here we have two policies: one for scaling in and one for scaling out. I've highlighted the current threshold that needs to be hit in order for either to be triggered.

What this says is "if outbound network traffic (NetworkOut) from our EC2 Instances stays above the upper threshold for a 5-minute period, add an EC2 if we haven't hit our maximum capacity" and "if it stays below the lower threshold for 5 minutes, remove an EC2 if we haven't already hit our minimum capacity."
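A simplified model of that decision, for illustration only. It assumes the policy compares the average NetworkOut bytes for one 5-minute period against an upper and a lower threshold and moves capacity by one instance at a time, staying between the Minimum and Maximum values. nextDesiredCapacity is a hypothetical name and the threshold numbers are placeholders; read the real ones from your Automatic Scaling tab.

```javascript
// Hypothetical, simplified model of a scale-out / scale-in trigger.
// networkOutAvg: average outbound bytes over the last 5-minute period.
function nextDesiredCapacity(current, networkOutAvg, cfg) {
  const { min, max, upperThreshold, lowerThreshold } = cfg;
  let desired = current;
  if (networkOutAvg > upperThreshold) desired = current + 1;      // scale out
  else if (networkOutAvg < lowerThreshold) desired = current - 1; // scale in
  // The group never goes outside its Minimum/Maximum capacity.
  return Math.min(max, Math.max(min, desired));
}

// Placeholder thresholds, in bytes.
const cfg = { min: 1, max: 4, upperThreshold: 6_000_000, lowerThreshold: 2_000_000 };
console.log(nextDesiredCapacity(1, 9_000_000, cfg)); // 2 (busy: add one)
console.log(nextDesiredCapacity(4, 9_000_000, cfg)); // 4 (already at max)
console.log(nextDesiredCapacity(2, 500_000, cfg));   // 1 (quiet: remove one)
console.log(nextDesiredCapacity(1, 500_000, cfg));   // 1 (already at min)
```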

And just like that, our application can grow or shrink based on our Auto Scaling Group configuration. Our application can now handle the additional load of going viral!

But, one final question: how does the Auto Scaling Group know about our EC2 network traffic? Enter CloudWatch.

CloudWatch

If you click on the Monitoring tab in your Auto Scaling Group, you'll see links that will take you to CloudWatch. Once in the CloudWatch Management Console, you'll see a variety of AWS services and resources listed along with their associated metrics.

Browsing through the list, you'll find EC2 and Auto Scaling Group, and monitoring details for each. Where did all of these metrics come from? AWS provides them for you automatically, along with this helpful dashboard, allowing us to do some pretty cool dynamic networking and programming based on individual metrics or a composite, such as Auto Scaling.

Our Auto Scaling Group has access to these metrics, and it watches the NetworkOut metric of our EC2 Instances to decide whether a scale-in or scale-out activity should occur.

Just another example of the leverage we gain from a cloud computing platform.

Elastic Beanstalk Deployment Recap

We took a look at a second method for deploying an application: Elastic Beanstalk. Compared to our S3 deployment, this route required us to add more code for configuring how our index.html is served.

We used Express.js, a Node.js web application framework, to serve our front end, and then uploaded our newly updated project to Elastic Beanstalk. This in turn launched a myriad of AWS resources and services for us.

We learned about EC2, Load Balancers, Auto Scaling Groups, and CloudWatch, and how they work together to deliver our project via a globally accessible URL endpoint.

There are even more settings, services, and resources Elastic Beanstalk configured and provisioned for us that we did not discuss, but for now you've taken a good first step into the benefits of a cloud hosting provider and a few of the key AWS services.

How to Deploy Your freeCodeCamp Project with AWS Lambda

For the last part of this post, we are going to deploy our code to a serverless environment. Serverless infrastructures are becoming increasingly popular and are often preferred over a dedicated on-premise server or even a hosted server (like EC2).

Not only is the serverless route often more cost-effective, it also lends itself to a different software architectural approach: microservices.

To get an introduction to the serverless world, we are going to deploy our code via AWS's serverless service called Lambda, along with another AWS service, API Gateway. Let's get started!

What is Serverless?!

Say, what?! This whole time we've been talking about servers delivering our code when the web browser requests it, so what does serverless mean?

To start off, it doesn't mean there is no server. There has to be one, since a server is a computer and we need our program running on a computer.

So, serverless doesn't mean no server. It means you and I, the code deployers, never see the server and have no configurations to set for it. The server belongs to AWS, and it just runs our code without us doing anything else.

Serverless means that for you and me in all practicality there is no server, but in reality there is.

Sounds pretty simple, right? It is! We have no server to configure; AWS has the server and handles everything. We just hand over the code.

You might be asking yourself, how is that different from EC2? Good question.

There's Less Configuration

Do you remember all the configuration options that we could mess with on Elastic Beanstalk, in the EC2 management console, and in the Auto Scaling Group and the Load Balancer?

Plus, Elastic Beanstalk created more resources, each with its own configuration, like the Load Balancer and Auto Scaling Group.

Well, as nice as it is that we can configure those, they can also be a pain to set up. More importantly, setting them up takes time, which takes us away from developing our actual application.

With a Lambda (an AWS serverless service), we just say, "hey, we want a Node.js environment to run our code," and after that there are no more configurations to make for the server itself. We can go back to writing more code.

It's Cheaper

Also, that EC2 is costing us money for as long as it runs. Now, it's simple to terminate it or temporarily stop it to save money, and it's usually cheaper than purchasing your own physical server, but it still costs money every hour it's running, even if no one is trying to reach your website.

So, what if there were an option that only charged us for the time our code ran? That's serverless!

With AWS Lambda, our code can be run at any time, since it's sitting on a server. But it only runs our program when asked to, and we only pay for the times it runs. For a site that gets little traffic, the cost savings can be huge.
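A back-of-the-envelope comparison makes the difference concrete. The sketch assumes an always-on server billed for every hour of the month, and a Lambda-style bill made of a per-request fee plus compute time measured in GB-seconds (memory in GB multiplied by run time in seconds). It ignores free tiers, and every price in it is a hypothetical placeholder, so plug in current numbers from the AWS pricing pages before drawing conclusions.

```javascript
// Rough monthly cost comparison. Every price passed in below is a
// HYPOTHETICAL PLACEHOLDER; check the AWS pricing pages for real numbers.
const HOURS_PER_MONTH = 730; // average hours in a month (8760 / 12)

// An always-on server is billed for the whole time it runs, busy or idle.
function alwaysOnMonthlyCost(hourlyPrice) {
  return hourlyPrice * HOURS_PER_MONTH;
}

// Lambda-style billing: a per-request fee plus compute in GB-seconds.
function perRequestMonthlyCost({ requests, avgDurationMs, memoryMb, pricePerMillionRequests, pricePerGbSecond }) {
  const gbSeconds = requests * (avgDurationMs / 1000) * (memoryMb / 1024);
  return (requests / 1_000_000) * pricePerMillionRequests + gbSeconds * pricePerGbSecond;
}

const server = alwaysOnMonthlyCost(0.01); // placeholder hourly price
const lambda = perRequestMonthlyCost({
  requests: 1_000_000,          // one million page views a month
  avgDurationMs: 200,
  memoryMb: 512,
  pricePerMillionRequests: 0.2, // placeholder
  pricePerGbSecond: 0.00001,    // placeholder
});
console.log(server.toFixed(2), lambda.toFixed(2)); // 7.30 1.20
```

With zero visitors the per-request bill drops to zero, while the always-on server still costs the same; that idle time is where the savings come from.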

Microservice Architecture

Because most programs have been written and deployed onto a server to run, all the code for an application has been bundled together so that the server could access and run the entire application. That makes sense!

But if you have a way to run code with a serverless approach, which only runs the code that's asked for, you could break that one application up into many applications. Breaking an application apart into smaller sub-applications is the idea behind microservices.

One of the main benefits of a microservice approach is the update process. If we have a monolithic application (one that is all bundled together on an EC2) and need to update one small piece of code, then we have to redeploy the entire application, which may mean service interruptions.

Alternatively, a microservice approach means we only update the small application within the whole that deals with that piece of code. This is a less disruptive approach to deployment, and it's one of the main benefits of the microservice approach that AWS Lambda makes possible.

I should note that serverless has its own drawbacks as well. For starters, if you have a microservice architecture via serverless, then getting those microservices to talk to each other requires extra work that a monolithic application doesn't have to deal with.

That being said, it's an approach that is massively popular and growing in use, so it's worth knowing.

Disclaimer: Technically, Part 1's use of S3 was a serverless deployment, but typically when people discuss serverless and AWS they're talking about Lambda.

How to Get Started with AWS Lambda

So, Lambdas let us execute code without worrying about server configuration or paying for an idle server. That's it. They run on a server owned by AWS, configured by AWS, managed by AWS, and never seen by us.

For the most part, all we control and care about is the function. This is what's referred to as function as a service (FaaS) in cloud computing. It lets us focus on code without needing to think about the complexities of infrastructure.

So now that we know that Lambdas are just functions running on an AWS server that we don't have to configure, let's get started with ours.

How to Create Our Lambda

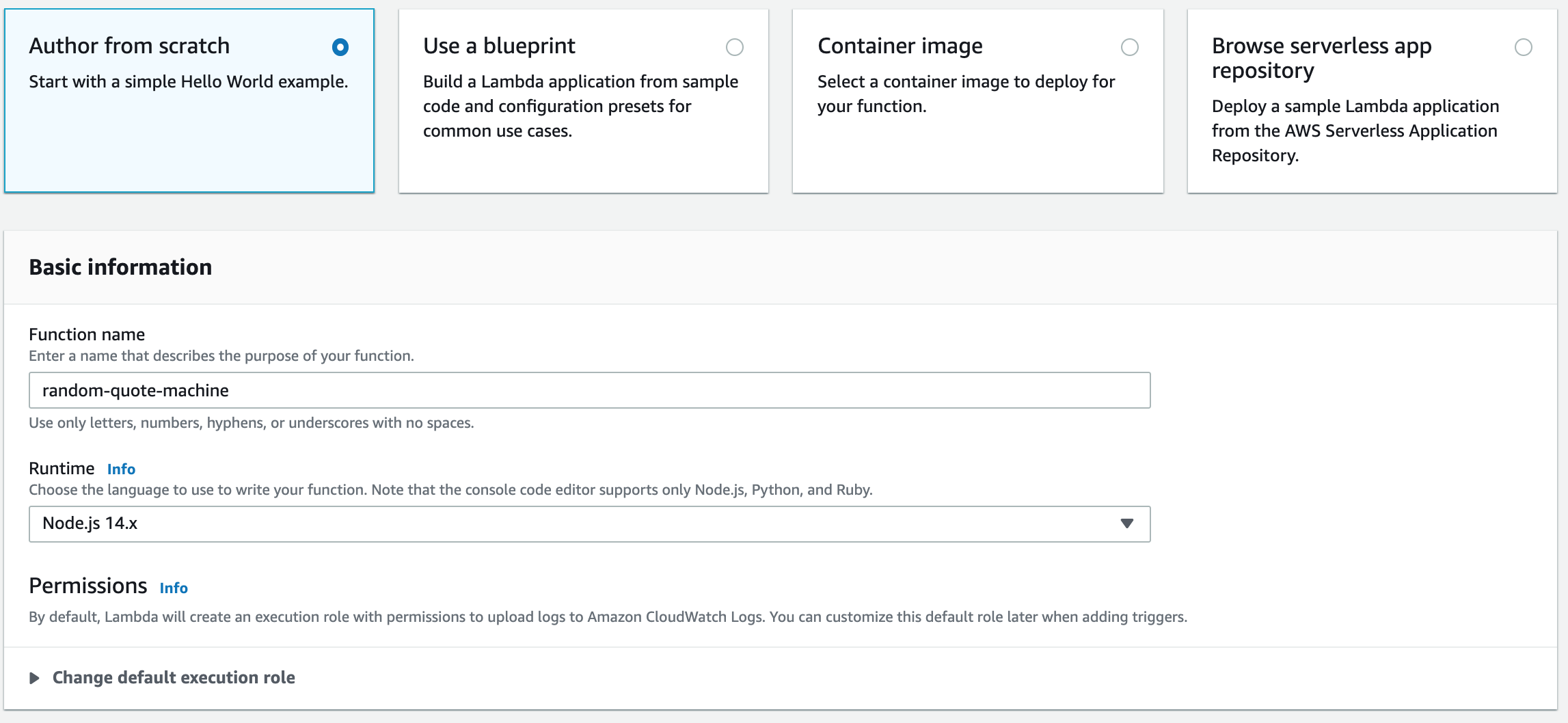

Head over to the AWS Lambda Management Console and click the Create Function button. On the next screen, we are going to keep the Author from scratch option, enter our function's name (aka the name for our Lambda), and then select the Node.js 14.x runtime.

That's all we need to do for now, so go ahead and click the Create Function button in the bottom right.

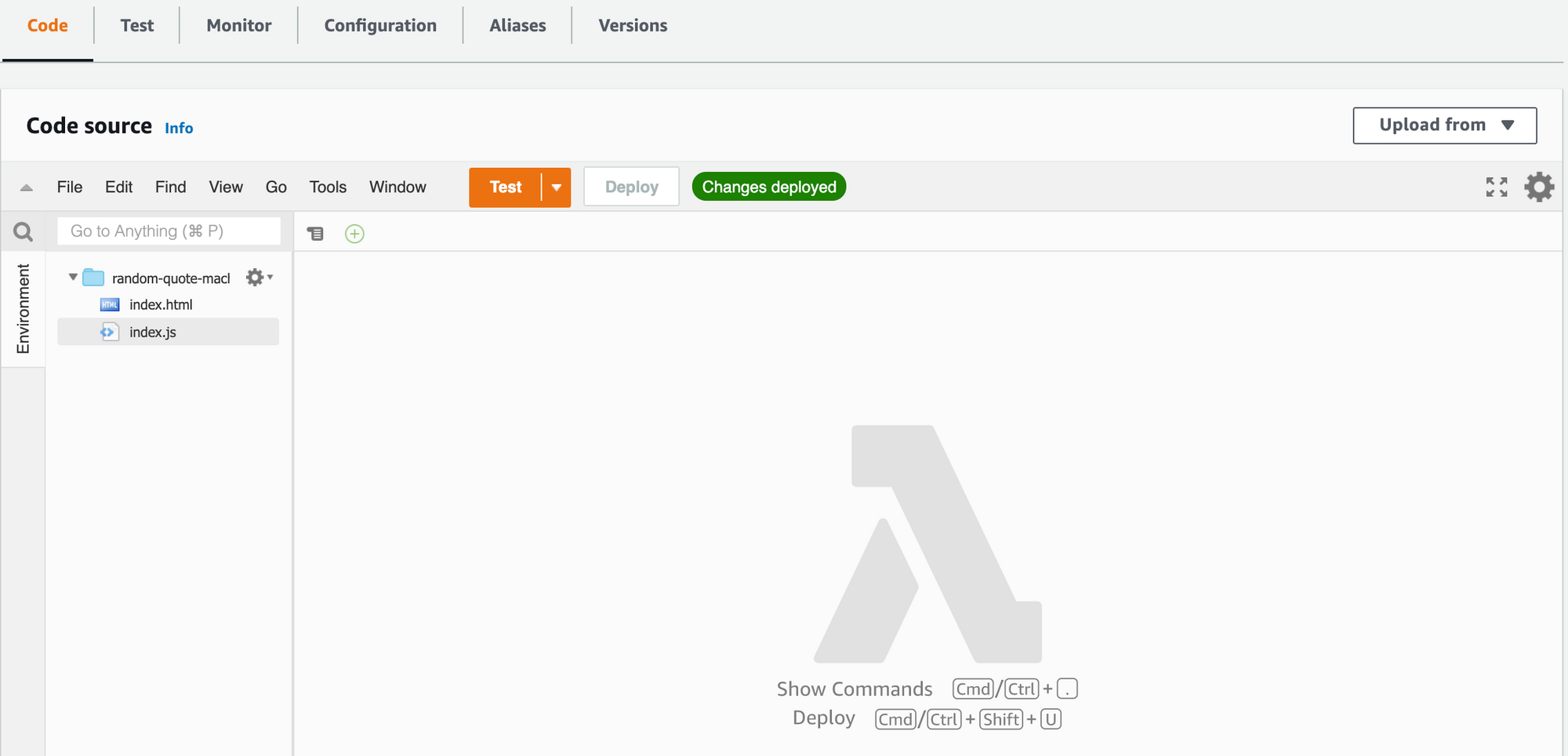

The next screen that loads is our console for managing a Lambda. If you scroll down a bit, you'll find an embedded code editor and file system. A directory (aka folder) with the name of your Lambda is already there, along with an index.js file. Click File and create a new file called index.html.

Adapt Your Project's Code

Due to the nature of this deployment, we need to adjust our code a little bit. Our freeCodeCamp project's index.html uses a <link href="styles.css" /> tag and a <script src="main.js"></script> tag to link our .css and .js code to our HTML. Instead, we are going to put that CSS and JS directly into the HTML file.

If we don't do this, then when we try to open our app, the browser will look for those files at a different URL route than we want, and our Lambda only returns index.html, so they wouldn't be found.

To change the code to work for us in Lambda, do the following:

- Replace the <link href="styles.css"/> line in your index.html file with <style></style> (this should be right above your <body> opening tag).

- Open your styles.css file, copy all of the CSS, and paste it between the two <style> tags (like <style> your pasted code </style>).

- Do the same thing with main.js: remove the src="main.js" within the opening <script> tag.

- Open your main.js file, copy all of your JS code, and paste it between the two <script> tags at the bottom of your index.html file (i.e. <script> your main.js code </script>).
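If you'd rather not copy and paste by hand (or you update the project later), the same steps can be scripted. This is a minimal Node.js sketch; inlineAssets is a hypothetical helper, and its regular expressions assume your tags look like the styles.css <link> and main.js <script> tags described above. One caveat: if your JavaScript contains the literal text </script>, the browser will end the inline script early.

```javascript
// Hypothetical build helper: swap the styles.css <link> and the main.js
// <script src> tag for inline <style> and <script> blocks.
function inlineAssets(html, css, js) {
  const linkTag = /<link\b[^>]*href=["']styles\.css["'][^>]*\/?>/i;
  const scriptTag = /<script\b[^>]*src=["']main\.js["'][^>]*>\s*<\/script>/i;
  if (!linkTag.test(html) || !scriptTag.test(html)) {
    throw new Error('could not find the styles.css link or main.js script tag');
  }
  // Replacement functions (not strings) so "$" characters in the CSS/JS are kept as-is.
  return html
    .replace(linkTag, () => `<style>\n${css}\n</style>`)
    .replace(scriptTag, () => `<script>\n${js}\n</script>`);
}

// Usage, from the project folder (writes a new file and leaves the original alone):
//   const fs = require('fs');
//   const out = inlineAssets(
//     fs.readFileSync('index.html', 'utf8'),
//     fs.readFileSync('styles.css', 'utf8'),
//     fs.readFileSync('main.js', 'utf8'));
//   fs.writeFileSync('index.lambda.html', out);
```

Using replacement functions matters because String.prototype.replace treats patterns like $& in a replacement string specially, and jQuery-style code often contains $.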

Once completed, open your index.html locally in the web browser to make sure your project still works before moving on.

Add Our Project's Code to Our Lambda

With our updated index.html page working, head back to our Lambda console. There, paste all of your index.html code into the index.html file you created in the Lambda. Double-check to make sure you copied and pasted all of your code.

Update index.js Lambda File

Okay, we have our index.html, but, just like with the Express.js app we made in the Elastic Beanstalk deployment, we need to tell the Lambda where the file we want delivered to the web browser is.

Copy and paste the following code into your Lambda's index.js file, then we'll discuss it.

```javascript
const fs = require('fs');

exports.handler = async (event) => {
  // Read index.html from the Lambda's own file system
  const contents = fs.readFileSync(`index.html`);

  // Return it in the format API Gateway expects from a proxy integration
  return {
    statusCode: 200,
    body: contents.toString(),
    headers: { "content-type": "text/html" }
  };
};
```

The top of this code should look familiar to you if you completed the Elastic Beanstalk deployment. We're importing a Node module called fs, which is short for file system. It lets us traverse our file system, in our case to find our index.html file.

You'll notice the exports.handler function. That is an out-of-the-box function given to us, and it's what this Lambda is configured to look for.

When this Lambda is triggered (more on that in a second), it looks for the designated file and function name to run. When we clicked Create Function, it came pre-configured to look in the index.js file for the handler function.

We could change this if we wanted to, but for now it's good enough to know that this handler function is our Lambda function.

The function reads our index.html file, assigns it to a variable called contents, and then returns it.

The way it returns it is specific to an API request, because that's how our Lambda is going to be triggered. When we open the project in a browser, the browser makes a GET request to an endpoint, which triggers this Lambda to return the index.html to the browser.

Let's go create the API that will trigger our Lambda to return the index.html file to the browser.

Important: Before we move on, click the Deploy Changes button. This saves our Lambda.

AWS API Gateway

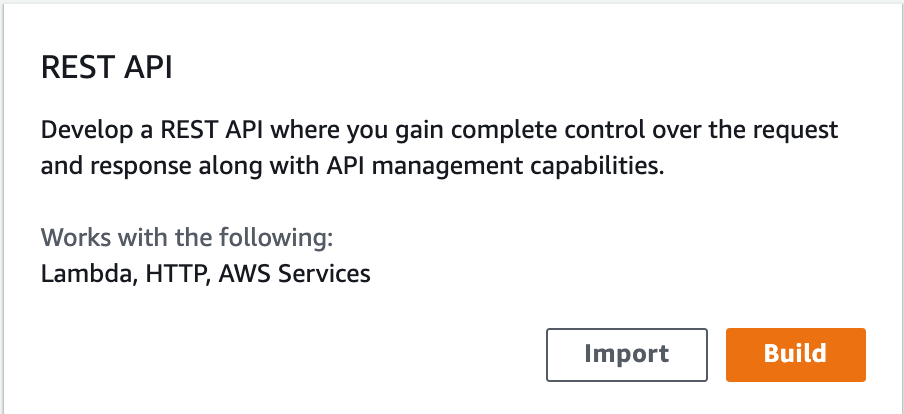

In the AWS services search bar, type API Gateway and open the link. You're now in the API Gateway console, and we want to click on Create API.

Next, select the REST API option and click Build.

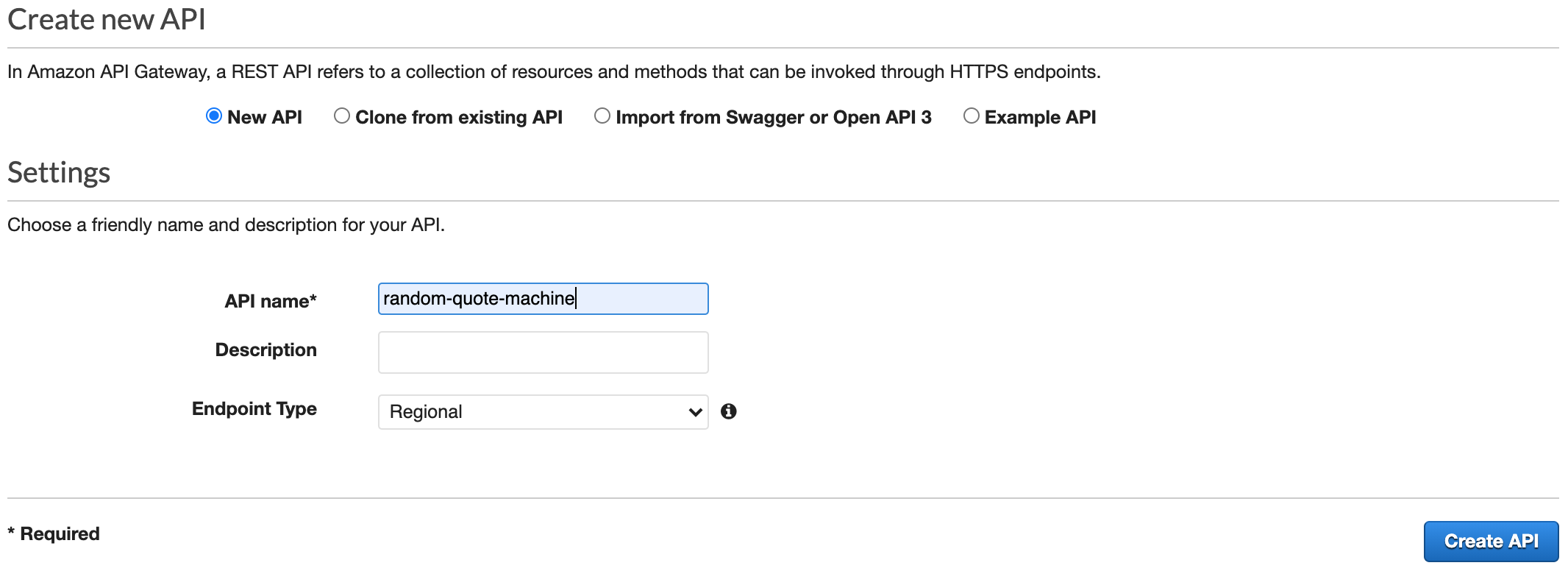

Fill in the name of your API, add a description if you'd like, and then click Create API.

After you create the API, you'll be redirected to the management console for this API. Now all we need to do is create an API endpoint for triggering our Lambda.

Create our GET Method

If you're unfamiliar with API methods, they are verbs that describe the action taking place. Our web browser is trying to GET the index.html file, and this API endpoint will be able to deliver it with help from our Lambda.

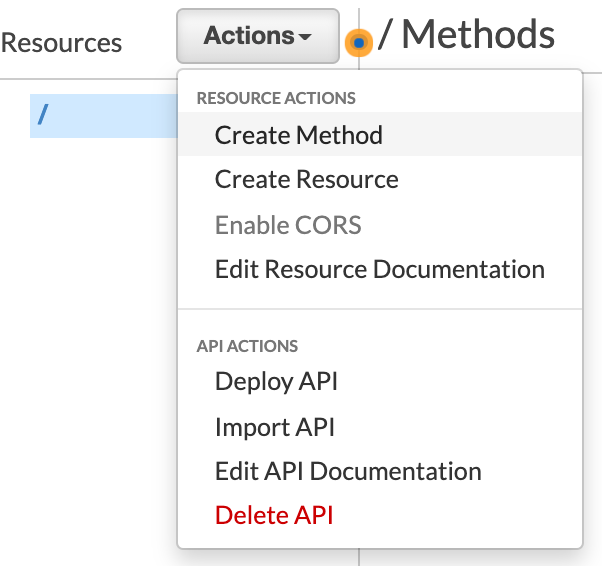

So, we need to create a GET method on our API. To do this, click on Actions and then select Create Method.

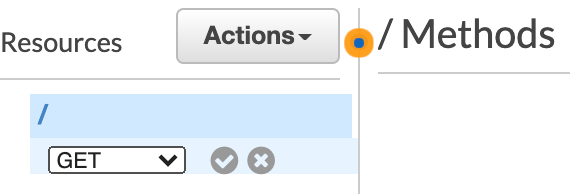

Then, select GET and click the check mark.

Select our Lambda as the Trigger

Now we have a GET method on our API, and we can attach the Lambda to be triggered when this method is hit.

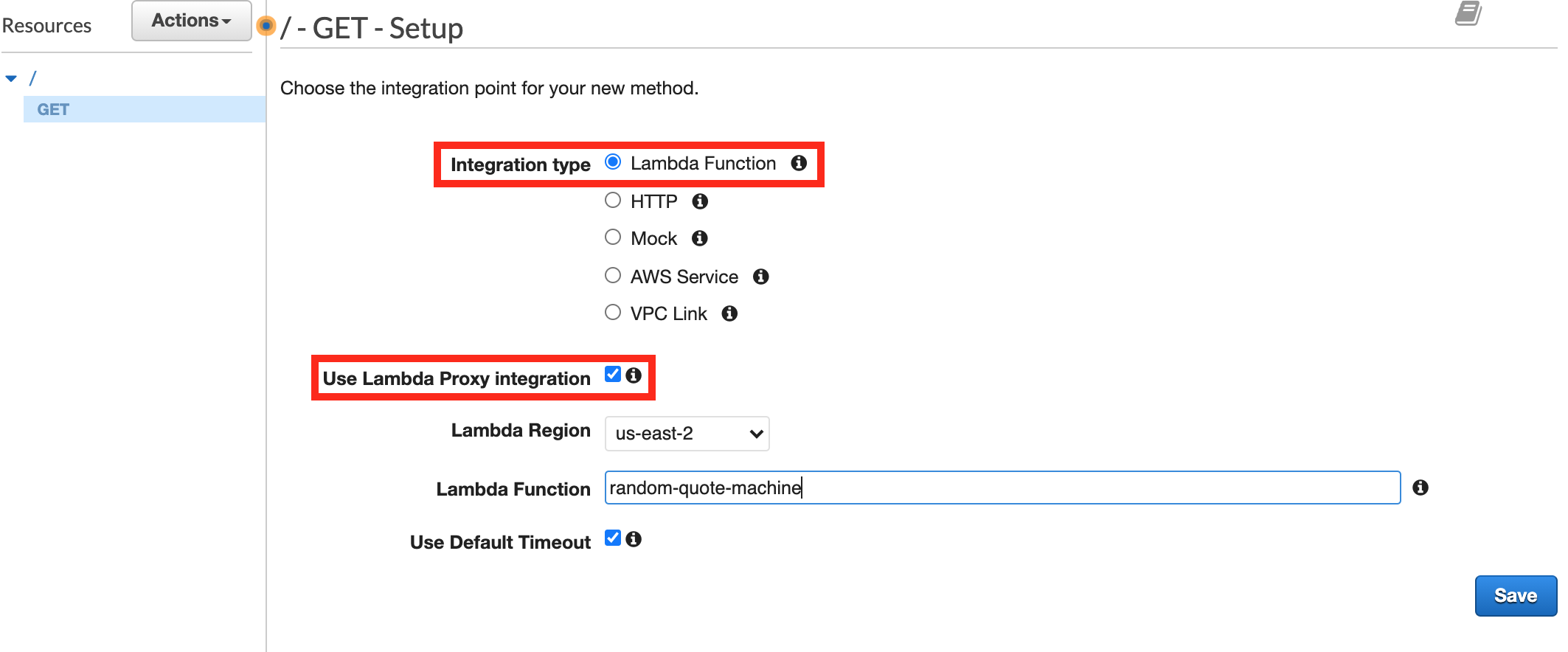

Click on the newly created GET method in your resources list. You'll then see a form for creating the method integration. This is where we pair our Lambda with this API endpoint.

Our integration should be:

- Integration Type: Lambda Function

- Use Lambda Proxy Integration: enabled

- Lambda Function: the name of your Lambda

- Use Default Timeout: enabled

You need to type the first letter of your Lambda's name for it to populate. If you're not seeing it populate, your Lambda might be in a different Lambda Region.

In that case, navigate to your Lambda, look in the top right of your screen to see its region (like Ohio, which is us-east-2), and then select that region in this step.

Click the Save button. Now the last thing to do is deploy our API Gateway so that the new GET method triggering our Lambda is live.
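About the Use Lambda Proxy Integration setting from the list above: with it enabled, API Gateway passes the whole HTTP request to your function as the event object (with fields such as httpMethod, path, and headers) and expects an object with statusCode, headers, and a string body back, which is exactly the shape our index.js returns. Here is a small sketch that reads the event; the handler logic and the trimmed-down sample event are hypothetical, and real events carry many more fields.

```javascript
// Hypothetical handler showing what a proxy-integration event gives you.
async function handler(event) {
  if (event.httpMethod !== 'GET') {
    return {
      statusCode: 405,
      headers: { 'content-type': 'text/plain', allow: 'GET' },
      body: 'Method Not Allowed',
    };
  }
  return {
    statusCode: 200,
    headers: { 'content-type': 'text/plain' },
    body: `You requested ${event.path}`,
  };
}

// A trimmed-down, made-up example of the event API Gateway would send.
const sampleEvent = { httpMethod: 'GET', path: '/', headers: { Accept: 'text/html' } };
handler(sampleEvent).then((res) => console.log(res.statusCode, res.body)); // 200 You requested /
```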

Deploy API Gateway

Click on the Actions drop-down again, the same drop-down we used to create our GET method.

Select the Deploy API option.

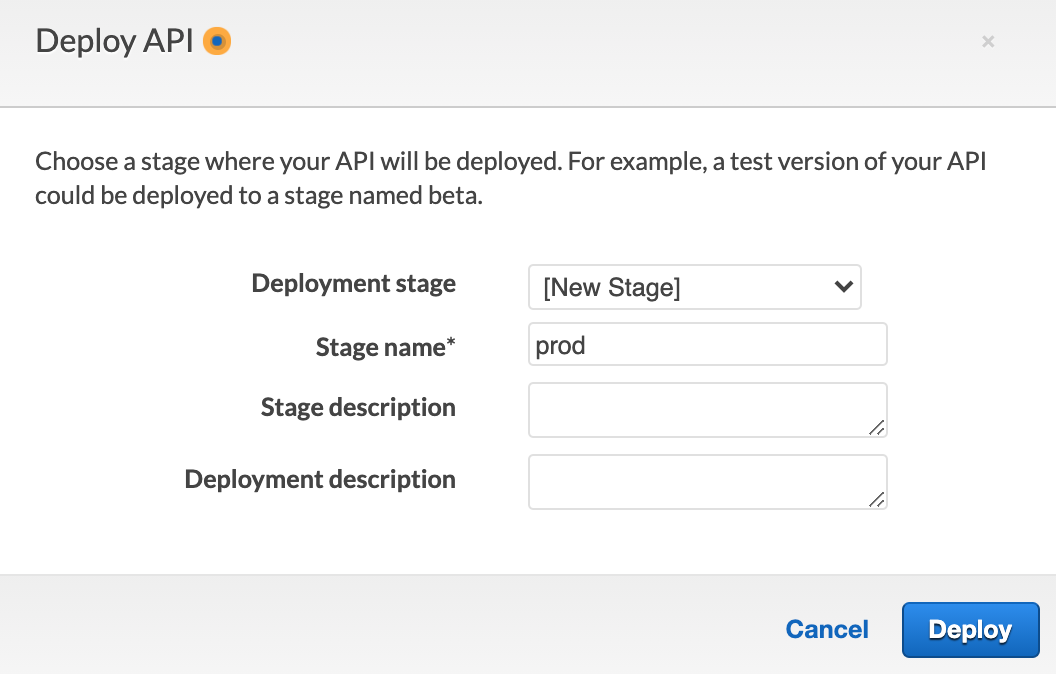

In the Deployment Stage drop-down, select [New Stage].

Enter a stage name, like prod, a description if you'd like, and then click Deploy.

That's it! We're done. Now let's go test it out.

Run It!

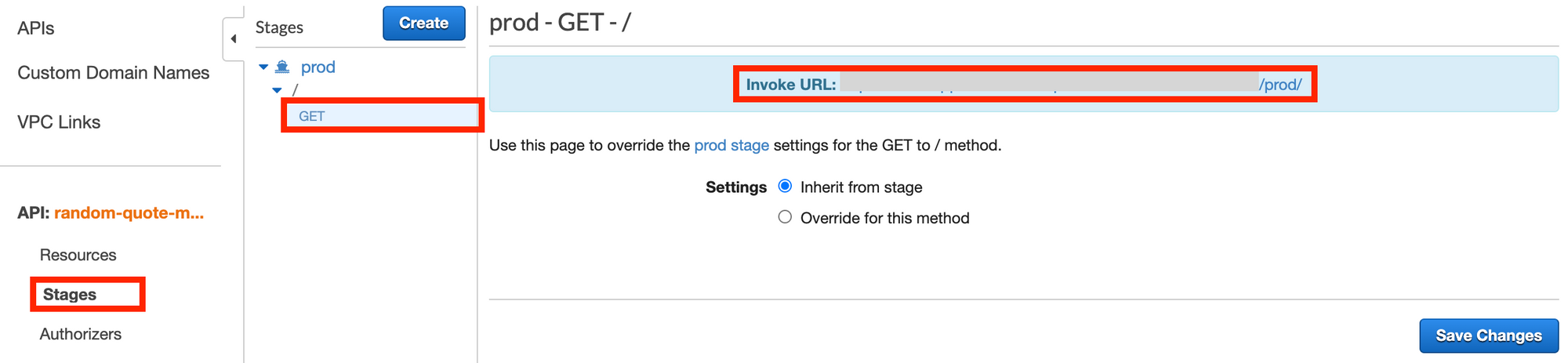

Just like S3 and Elastic Beanstalk gave us an endpoint to view our project, API Gateway gives us one too, though this time we helped create the endpoint by designating the GET method and the name of our deployment stage.

To view your endpoint, select Stages in the left navigation menu, and you should see the name of your stage in the box to the right. Expand your stage and click on the GET method.

!! MAKE SURE YOU CLICK ON THE GET METHOD TO GET THE RIGHT URL !!

To the right you'll now see the Invoke URL, and if you click on it you'll be directed to your project.
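For REST APIs, the Invoke URL follows the pattern https://{rest-api-id}.execute-api.{region}.amazonaws.com/{stage}/, so you can predict it once you know your API's ID, region, and stage name. Here is a tiny helper that assembles one; the function name and the API ID below are made up.

```javascript
// Builds a REST API invoke URL from its parts, following the
// https://{restApiId}.execute-api.{region}.amazonaws.com/{stage} pattern.
function buildInvokeUrl(restApiId, region, stage, resourcePath = '/') {
  const cleanPath = resourcePath.startsWith('/') ? resourcePath : `/${resourcePath}`;
  return `https://${restApiId}.execute-api.${region}.amazonaws.com/${stage}${cleanPath}`;
}

// "abc123defg" is a made-up API ID; us-east-2 is the Ohio region.
console.log(buildInvokeUrl('abc123defg', 'us-east-2', 'prod'));
// https://abc123defg.execute-api.us-east-2.amazonaws.com/prod/
```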

Congratulations! You should now see your project running from your third deployment approach using AWS.

If you are having trouble seeing your project working, here are a few things to double-check:

- Did you click on Deploy Changes in your Lambda after we added our index.html and edited index.js?

- Did you enable Use Lambda Proxy Integration when we created our GET method? (You'll have to delete the method and recreate it to make sure.)

- Is your project's code correctly copied into the <style> and <script> tags?

Use Cases for AWS Lambda

Lambda is, in my opinion, the most versatile AWS resource we can use. The use cases for Lambda are seemingly endless. With it we can:

- Deliver our front end (like we just did)

- Do real-time processing

- Process objects (like images, videos, documents) uploaded to S3

- Automate tasks

- Build a serverless backend for an application

- and many more than!

Conclusion

Congratulations on reaching the end of this tutorial! By now you should have a basic understanding of the main AWS resources and services: S3, EC2, Auto Scaling Group, Load Balancer, API Gateway, CloudWatch, and Lambda.

You've taken a giant step forward in understanding how to deploy projects you create on freeCodeCamp and beyond. I hope you found this information useful, and if you have any comments, questions, or suggestions, please don't hesitate to reach out!


Source: https://www.freecodecamp.org/news/how-to-deploy-your-freecodecamp-project-on-aws/
